| Parameter name | Meaning |
| --- | --- |
| Article topic | Scalability |
| Rubric (thematic category) | Computers |

Scalability is the ability to increase the number and power of processors, the amount of main and external memory, and other resources of a computing system (CS). It must be provided by the architecture and design of the computer as well as by the associated software.

For example, the ability to scale a cluster is limited by the ratio of processor speed to communication speed, which should not be too large (in practice, for large systems this ratio should not exceed 3-4, otherwise even a single operating system image mode cannot be implemented). On the other hand, the last 10 years of processor and interconnect development show that the speed gap between them keeps growing. Each new processor added to a truly scalable system should provide a predictable increase in performance and throughput at an acceptable cost. One of the basic tasks in building scalable systems is to minimize the cost of expanding the computer and to simplify planning.

Ideally, adding processors to a system should lead to a linear increase in its performance. However, this is not always the case. Performance losses can occur, for example, when bus bandwidth is insufficient because of increased traffic between processors and main memory, as well as between memory and I/O devices. In practice, the actual performance gain is difficult to estimate in advance because it largely depends on the dynamics of application behavior.
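One simple way to see why adding processors rarely gives linear growth is Amdahl's law. The text above does not mention it; it is used here only as an illustrative model, and the 90% parallel fraction is an assumed figure, not a measurement.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the work can be spread
    over `processors`; the rest stays serial (bus contention, shared memory)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# Assumed workload: 90% of the work parallelizes, 10% does not.
for n in (1, 2, 4, 8, 16):
    print(n, "processors ->", round(amdahl_speedup(0.9, n), 2), "x")
```

Under this model the gain from each extra processor shrinks as the serial part starts to dominate, which matches the observation that real growth falls short of linear.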

The ability to scale a system is determined not only by the hardware architecture but also by the properties of the software. Software scalability affects all levels, from simple message passing mechanisms to complex objects such as transaction monitors and the entire application system environment. In particular, the software must minimize interprocessor traffic, which can impede the linear growth of system performance. Hardware (processors, buses, and I/O devices) is only one part of a scalable architecture on which software can deliver predictable performance gains. It is important to understand that, for example, simply upgrading to a more powerful processor can overload other system components. This means that a truly scalable system must be balanced in all respects.


Let's imagine that we have made a website. The process was exciting and it was great to see the number of visitors increase.

But at some point traffic starts to grow very quickly: someone published a link to your application on Reddit or Hacker News, something happened to the project's source code on GitHub, and in general everything seems to go against you.

On top of that, your server has crashed and can’t handle the ever-increasing load. Instead of acquiring new clients and/or regular visitors, you are left with nothing and, moreover, an empty page.

All your efforts to resume work are in vain - even after a reboot, the server cannot cope with the flow of visitors. You are losing traffic!

No one can foresee traffic problems. Very few people engage in long-term planning when working on a potentially high-return project to meet a fixed deadline.

How then can you avoid all these problems? To do this, you need to solve two issues: optimization and scaling.

Optimization

First of all, you should update to the latest version of PHP (5.5 at the time of writing, which includes OPcache), index the database, and cache static content (rarely changing pages such as About, FAQ, and so on).

Optimization affects more than just caching static resources. It is also possible to install an additional non-Apache server (for example, Nginx) specifically designed for processing static content.

The idea is this: you put Nginx in front of your Apache server (Nginx will be the frontend server and Apache the backend) and task it with intercepting requests for static resources (i.e. *.jpg, *.png, *.mp4, *.html ...) and serving them itself, without sending a request to Apache.

This scheme is called reverse proxy (it is often mentioned together with the load balancing technique, which is described below).
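The routing decision made by the frontend can be sketched roughly as follows. This is not Nginx configuration, only an illustration of the rule "static files are served by the frontend, everything else goes to the backend"; the function name and the handler labels are hypothetical.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Extensions taken from the example above; extend as needed.
STATIC_EXTENSIONS = {".jpg", ".png", ".mp4", ".html"}


def choose_handler(url: str) -> str:
    """Return 'frontend' for static resources and 'backend' for the rest."""
    path = urlsplit(url).path                    # drop the query string
    suffix = PurePosixPath(path).suffix.lower()  # '.PNG' -> '.png'
    return "frontend" if suffix in STATIC_EXTENSIONS else "backend"


for url in ("/img/logo.png", "/index.php?page=2", "/"):
    print(url, "->", choose_handler(url))
```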

Scaling

There are two types of scaling - horizontal and vertical.

We say that a site is scalable when it can handle increased load without the need for software changes.

Vertical scaling

Imagine that you have a web server serving a web application. This server has the following characteristics: 4GB RAM, an i5 CPU, and a 1TB HDD.

It does its job well, but to better handle the increasing traffic, you decide to replace the 4GB RAM with 16GB, install a new i7 CPU, and add hybrid PCIe SSD/HDD storage.

The server has now become more powerful and can withstand increased loads. This is what is called vertical scaling or “ scaling in depth"- you improve the characteristics of the car to make it more powerful.

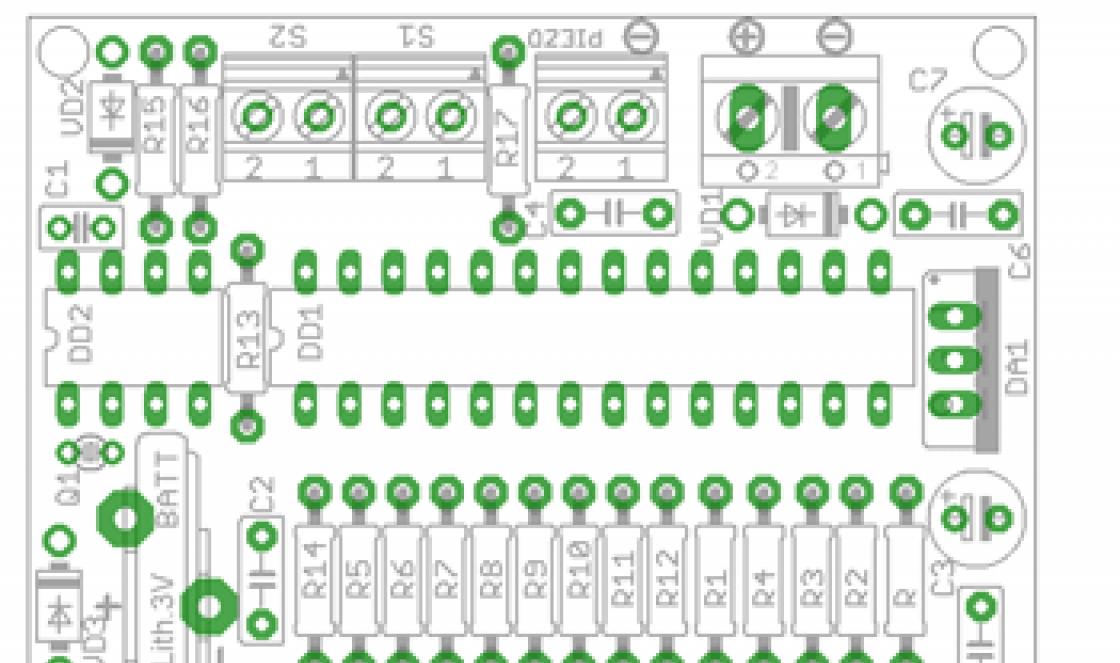

This is well illustrated in the image below:

Horizontal scaling

On the other hand, we can scale horizontally. In the example above, the cost of upgrading the hardware is unlikely to be lower than the initial cost of purchasing the server.

This is expensive and often does not give the effect we expect, because most scaling problems relate to the parallel execution of tasks.

If the number of processor cores is not enough to execute the available threads, then it does not matter how powerful the CPU is - the server will still run slowly and keep visitors waiting.

Horizontal scaling involves building clusters of machines (often quite low-power) linked together to serve a website.

In this case, a load balancer is used: a machine or program that determines which machine in the cluster the next incoming request should be sent to.

The machines in the cluster then share the work among themselves. In this case, your site's throughput can increase substantially compared with vertical scaling. This is also known as "scaling out".

There are two types of load balancers - hardware and software. A software balancer is installed on a regular machine and accepts all incoming traffic, redirecting it to the appropriate handler. For example, Nginx can act as a software load balancer.

It accepts requests for static files and serves them itself without burdening Apache. Another popular software balancer is Squid, which I use at my company. It gives full control over all possible issues through a very user-friendly interface.

A hardware balancer is a separate, dedicated machine that performs only the task of balancing and on which, as a rule, no other software is installed. The most popular models are designed to handle huge amounts of traffic.
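The simplest policy a balancer can apply is round-robin: hand each new request to the next machine in turn. The sketch below is a toy illustration of that policy, not how Nginx or Squid is implemented; the class and server names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self):
        """Return the server that should receive the next request."""
        return next(self._rotation)


balancer = RoundRobinBalancer(["node1", "node2", "node3"])
print([balancer.next_server() for _ in range(5)])
```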

When scaling horizontally, the following happens:

Note that the two scaling methods described are not mutually exclusive - you can improve the hardware characteristics of the machines (also called nodes) used in a scale-out cluster system.

In this article we will focus on horizontal scaling, since in most cases it is preferable (cheaper and more efficient), although it is more difficult to implement from a technical point of view.

Difficulties with data sharing

Several sticking points arise when scaling PHP applications. One bottleneck is the database (we'll talk more about this in Part 2 of this series).

Problems also arise with managing session data: having logged in on one machine, you will find yourself logged out if the balancer sends your next request to another machine. There are several ways to solve this problem: you can share local data between machines, or use a persistent load balancer.

Persistent Load Balancer

A persistent load balancer remembers where the previous request of a particular client was processed and, on the next request, sends the request there.

For example, if I visited our site and logged in there, then the load balancer redirects me to, say, Server1, remembers me there, and the next time I click, I will be redirected to Server1 again. All this happens completely transparently to me.

But what if Server1 crashed? Naturally, all session data will be lost, and I will have to log in again on a new server. This is very unpleasant for the user. Moreover, this is an extra load on the load balancer: it will not only need to redirect thousands of people to other servers, but also remember where it redirected them.

This becomes another bottleneck. What if the only load balancer itself fails and all information about the location of clients on the servers is lost? Who will manage the balancing? It's a complicated situation, isn't it?
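The behavior described in this section, including what happens when Server1 goes down, can be sketched as follows. It is a toy model under stated assumptions: clients are identified by a plain string, and all class, method, and server names are hypothetical.

```python
class StickyBalancer:
    """Remembers which server handled each client and reuses it."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.assignments = {}   # client id -> server; this table is the extra state
        self._counter = 0

    def route(self, client_id):
        if not self.servers:
            raise RuntimeError("no servers available")
        server = self.assignments.get(client_id)
        if server not in self.servers:       # new client, or its server is gone
            server = self.servers[self._counter % len(self.servers)]
            self._counter += 1
            self.assignments[client_id] = server
        return server

    def remove_server(self, server):
        """Simulate a crash: sessions kept on that server are lost."""
        self.servers.remove(server)


lb = StickyBalancer(["Server1", "Server2"])
print(lb.route("me"), lb.route("me"))   # same server both times
lb.remove_server("Server1")
print(lb.route("me"))                   # moved elsewhere; the user must log in again
```

Note that the `assignments` table lives only inside the balancer, so losing the balancer loses every client-to-server mapping, which is exactly the risk raised above.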

Local Data Sharing

Sharing session data within a cluster looks like a good solution, but it requires changes to the application architecture. It is worth it, though, because the bottleneck gets wider: the failure of one server no longer has a fatal effect on the entire system.

Session data is exposed through PHP's superglobal $_SESSION array. It is also no secret that, by default, the contents of $_SESSION are stored on the hard drive.

Accordingly, since the disk belongs to one particular machine, the others do not have access to it. How, then, can we organize shared access for several computers?

Note that session handlers in PHP can be overridden - you can define your own class/function to manage sessions.

Using the Database

By using our own session handler, we can be sure that all session information is stored in the database. The database must be on a separate server (or in its own cluster). In this case, evenly loaded servers will only process business logic.
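The idea of a session handler backed by a shared database can be sketched like this. It is written in Python rather than PHP and does not use PHP's session handler interface; an in-memory SQLite database stands in for the separate database server, and the class, table, and method names are all hypothetical.

```python
import json
import sqlite3


class DatabaseSessionStore:
    """Stores session data in a table instead of local files."""

    def __init__(self, connection):
        self.db = connection
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS sessions "
            "(id TEXT PRIMARY KEY, data TEXT NOT NULL)"
        )

    def write(self, session_id, data):
        self.db.execute(
            "INSERT OR REPLACE INTO sessions (id, data) VALUES (?, ?)",
            (session_id, json.dumps(data)),
        )
        self.db.commit()

    def read(self, session_id):
        row = self.db.execute(
            "SELECT data FROM sessions WHERE id = ?", (session_id,)
        ).fetchone()
        return json.loads(row[0]) if row else {}

    def destroy(self, session_id):
        self.db.execute("DELETE FROM sessions WHERE id = ?", (session_id,))
        self.db.commit()


# Two application servers share one database, so either can serve the user.
shared_db = sqlite3.connect(":memory:")
server1 = DatabaseSessionStore(shared_db)
server2 = DatabaseSessionStore(shared_db)
server1.write("abc123", {"user": "alice"})
print(server2.read("abc123"))
```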

While this approach works quite well, under heavy traffic the database becomes not only a single point of failure (if you lose it, you lose everything) but also a target of very frequent access, because session data has to be written and read constantly.

This becomes another bottleneck in our system. In this case you can scale the database horizontally, which is problematic with traditional databases such as MySQL, PostgreSQL, and the like (this problem will be covered in the second part of the series).

Using a shared file system

You can set up a network file system that all servers will access and work with session data. You shouldn't do that. This is a completely ineffective approach, with a high probability of data loss, and it all works very slowly.

This is another potential danger, even more dangerous than the database case described above. Activating the shared file system is very simple: change the value of session.save_path in the php.ini file, but it is strongly recommended to use another method.

If you still want to implement the shared file system option, there is a much better solution: GlusterFS.

Memcached

You can use memcached to store session data in RAM. This is a rather unreliable method, since session data will be overwritten as soon as free memory runs out.

There is no persistence - login data will be stored as long as the memcached server is running and there is free space to store this data.

You might be wondering: isn't RAM specific to each machine? How can this method be applied to a cluster? Memcached can virtually combine the available RAM of multiple machines into a single storage pool.

The more machines you have, the larger the size of the shared storage created. You don't have to manually allocate memory within storage, but you can control the process by specifying how much memory can be allocated from each machine to create a shared space.

Thus, the required amount of memory remains at the disposal of computers for their own needs. The rest is used to store session data for the entire cluster.
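Pooling memory across machines usually comes down to the client hashing each key to decide which node holds it. The sketch below illustrates that idea with plain dictionaries standing in for memcached nodes; it is not the memcached client API, and the class and node names are hypothetical.

```python
import hashlib


class PooledCache:
    """Spreads keys over several nodes so their memory acts as one store."""

    def __init__(self, node_names):
        self.nodes = {name: {} for name in node_names}  # one dict per machine
        self._names = sorted(self.nodes)
        if not self._names:
            raise ValueError("at least one node is required")

    def _node_for(self, key):
        # A stable hash means every client picks the same node for a key.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % len(self._names)
        return self._names[index]

    def set(self, key, value):
        self.nodes[self._node_for(key)][key] = value

    def get(self, key):
        return self.nodes[self._node_for(key)].get(key)


pool = PooledCache(["node1", "node2", "node3"])
pool.set("session:42", {"user": "alice"})
print(pool.get("session:42"))
```

A plain modulo mapping like this remaps most keys when a node is added or removed; real deployments often use consistent hashing to limit that effect.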

In addition to sessions, the cache can also contain any other data you desire, the main thing is that there is enough free space. Memcached is an excellent solution that has become widely used.

Using this method in PHP applications is very easy: you just need to change the value in the php.ini file:

    session.save_handler = memcache
    session.save_path = "tcp://path.to.memcached.server:port"

Redis Cluster

Redis is a NoSQL, in-memory data store like Memcached, but it is persistent and supports more complex data types than plain strings stored as "key => value" pairs.

At the time of writing, this solution does not support clusters in a stable release, so using it in a horizontally scaled system is not as simple as it might seem at first glance, but it is quite doable. In fact, an alpha version with cluster support is already out and you can use it.

When compared to solutions like Memcached, Redis is a cross between a regular database and Memcached.

Other solutions:

- ZSCM from Zend is a good alternative, but it requires Zend Server to be installed on each node in the cluster;

- Other NoSQL storage and caching systems are also quite workable: check out Scache, Cassandra, or Couchbase, which are all fast and reliable.

Conclusion

As you can see from the above, horizontal scaling of PHP applications is not a weekend picnic.

In general, scalability is defined as the ability of a computing system to efficiently handle an increase in the number of users or supported resources without losing performance or increasing the administrative burden of managing it. In this case, a system is called scalable if it is able to increase its performance when adding new hardware. In other words, scalability refers to the ability of a system to grow as the load on it increases.

Scalability is an important property of computing systems that may need to operate under heavy load, because it means that you do not have to start from scratch and create an entirely new information system. If you have a scalable system, you can most likely keep the same software by simply adding more hardware.

For distributed systems, several parameters are usually identified that characterize their scale: the number of users and the number of components that make up the system, the geographic distance between the system's networked computers, and the number of administrative organizations serving parts of the distributed system. Therefore, the scalability of distributed systems is also defined along the corresponding dimensions:

Load scalability. The ability of a system to increase its performance as the load increases by replacing existing hardware components with more powerful ones or by adding new hardware. The first option, increasing the performance of each component of the system in order to increase overall performance, is called vertical scaling, and the second, increasing the number of networked computers (servers) in the distributed system, is called horizontal scaling.

Geographic scalability. The ability of a system to maintain its basic characteristics, such as performance, simplicity, and ease of use, as its components move from a local arrangement to a more geographically dispersed one.

Administrative scalability. Characterizes the ease of system management with an increase in the number of administratively independent organizations serving parts of one distributed system.

Scaling difficulties. Building scalable systems involves solving a wide range of problems and often runs into the limitations of the centralized services, data, and algorithms implemented in computing systems. Namely, many services are centralized in the sense that they are implemented as a single process and can only run on one computer (the server). The problem with this approach is that as the number of users or applications using the service increases, the server running it becomes a bottleneck and limits overall performance. Even if we assume that the power of such a server can be increased without limit (vertical scaling), the limiting factor will then be the throughput of the communication lines connecting it with the remaining components of the distributed system. Likewise, data centralization requires centralized processing, leading to the same limitations.

An example of the benefits of a decentralized approach is the Domain Name System (DNS), which is one of the largest distributed naming systems today. DNS is used primarily to look up IP addresses by domain name and processes millions of requests from computers around the world. The distributed DNS database is maintained by a hierarchy of DNS servers that interact according to a specific protocol. If all DNS data were stored centrally on a single server, and every request to resolve a domain name were sent to that server, it would be impossible to use such a system worldwide.

Separately, it is worth noting the limitations created by the use of centralized algorithms. A centralized algorithm must first receive all the input data, then perform the appropriate operations on it, and only then distribute the results to all interested parties. From this point of view, the problems of using centralized algorithms are equivalent to the problems of centralizing services and data discussed above. Therefore, to achieve good scalability, you should use distributed algorithms, in which parts of the same algorithm are executed in parallel by independent processes.

Unlike centralized algorithms, distributed algorithms have the following properties that actually make them much more difficult to design and implement:

Lack of global state knowledge. As already said, centralized algorithms have complete information about the state of the entire system and determine the next actions based on its current state. In turn, each process that implements part of a distributed algorithm has direct access only to its own state, but not to the global state of the entire system. Accordingly, processes make decisions based only on their local information. It should be noted that each process can obtain information about the state of other processes in a distributed system only from incoming messages, and this information may be out of date by the time it is received. A similar situation occurs in astronomy: knowledge about the object being studied (a star or galaxy) is formed on the basis of light and other electromagnetic radiation, and this radiation gives an idea of the state of the object in the past. For example, knowledge about an object located five thousand light years away is five thousand years out of date.

Lack of a common unified time. The events that make up the execution of a centralized algorithm are totally ordered: for any pair of events, we can confidently say that one of them happened before the other. When executing a distributed algorithm, because there is no single time shared by all processes, events cannot be considered totally ordered: for some pairs of events we can say which of them occurred before the other, for others we cannot.
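Vector clocks are one common way to make this partial order concrete. The text does not name them, so treat the sketch below as an illustrative assumption: each event carries a tuple with one counter per process, and two events are ordered only if one tuple is less than or equal to the other in every position.

```python
def happened_before(a, b):
    """True if the event stamped `a` causally precedes the event stamped `b`.
    Both stamps are tuples with one counter per process."""
    pairs = list(zip(a, b))
    return all(x <= y for x, y in pairs) and any(x < y for x, y in pairs)


def compare(a, b):
    """Classify two events by their vector timestamps."""
    if a == b:
        return "same"
    if happened_before(a, b):
        return "before"
    if happened_before(b, a):
        return "after"
    return "concurrent"   # neither event can be said to precede the other


print(compare((1, 0), (2, 1)))   # ordered pair
print(compare((1, 0), (0, 1)))   # pair with no defined order
```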

Lack of determinism. A centralized algorithm is most often defined as a strictly deterministic sequence of actions that describes the process of transforming an object from an initial state to a final state. Thus, if we run a centralized algorithm with the same set of input data, we will get the same result and the same sequence of transitions from state to state. In turn, the execution of a distributed algorithm is non-deterministic because processes run independently at different and unknown speeds, and because of random delays in the transmission of messages between them. Therefore, even though the concept of a global state can be defined for distributed systems, the execution of a distributed algorithm can only to a limited extent be viewed as a transition from one global state to another, because for the same algorithm the execution can be described by a different sequence of global states. Such alternative sequences usually consist of other global states, and so there is little point in saying that a particular state is reached during the execution of a distributed algorithm.

Failure Tolerance. A failure in any of the processes or communication channels should not cause disruption to the distributed algorithm.

Achieving geographic scalability requires different approaches. One of the main reasons for the poor geographic scalability of many distributed systems designed for local networks is that they are based on the principle of synchronous communication. In this type of communication, the client calling a server service is blocked until a response is received. This works well when interaction between processes is fast and unnoticeable to the user. However, as the latency of access to a remote service grows in a global system, this approach becomes less and less attractive and, very often, completely unacceptable.

Another difficulty in ensuring geographic scalability is that communication in global networks is inherently unreliable and the interaction of processes is almost always point-to-point. In turn, the connection in local networks is highly reliable and involves the use of broadcast messages, which greatly simplifies the development of distributed applications. For example, if a process needs to discover the address of another process that provides a particular service, on local networks it only needs to send out a broadcast message asking the searched process to respond to it. All processes receive and process this message. But only the process that provides the required service responds to the request received by indicating its address in the response message. Obviously, such interaction overloads the network, and it is unrealistic to use it in global networks.

Scaling technologies. In most cases, scaling difficulties show up as problems with the efficiency of distributed systems, caused by the limited performance of their individual components: servers and network connections. There are several basic technologies that can reduce the load on each component of a distributed system. They usually include distribution, replication, and caching.

Distribution involves breaking the set of supported resources into parts and then distributing those parts across system components. A simple example of distribution is a distributed file system in which each file server serves its own set of files from a common address space. Another example is the already mentioned Domain Name System (DNS), in which the entire DNS namespace is divided into zones, and the names of each zone are served by a separate DNS server.
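The zone idea can be sketched as a lookup that finds the most specific zone containing a name and returns the server responsible for it. This is a simplification that ignores how real DNS delegation and resolution work; the zone table and server names below are hypothetical.

```python
# Hypothetical zone -> responsible server table.
ZONES = {
    "org": "ns.org-registry",
    "example.org": "ns.example.org",
    "eu.example.org": "ns.eu.example.org",
}


def server_for(name, zones=ZONES):
    """Return the server of the most specific zone that contains `name`."""
    labels = name.lower().rstrip(".").split(".")
    # Try the full name first, then drop leading labels one at a time.
    for start in range(len(labels)):
        candidate = ".".join(labels[start:])
        if candidate in zones:
            return zones[candidate]
    raise LookupError(f"no zone serves {name!r}")


print(server_for("www.eu.example.org"))
print(server_for("mail.example.org"))
```

Because each server answers only for its own zone, no single machine has to hold or serve the whole namespace.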

Replication and caching play an important role in ensuring scalability. Replication not only improves resource availability in the event of a partial failure, but also helps balance the load between system components, thereby increasing performance. Caching is a special form of replication in which a copy of a resource is created in close proximity to the user of that resource. The only difference is that replication is initiated by the owner of the resource, while caching is initiated by the user when accessing the resource. However, it is worth noting that having multiple copies of a resource leads to other complications, namely the need to keep them consistent, which in turn can negatively affect the scalability of the system.

Thus, distribution and replication allow requests entering the system to be spread across multiple components, while caching reduces the number of repeated calls to the same resource.
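How caching cuts down repeated calls to the same resource can be shown with a small read-through cache. This is a minimal sketch; the class name and the `fetch` callback standing in for a remote call are assumptions. The explicit `invalidate` method hints at the consistency problem: a cached copy is only as fresh as its last fetch.

```python
class ReadThroughCache:
    """Keeps local copies of remote resources, fetched on first access."""

    def __init__(self, fetch):
        self._fetch = fetch        # function that performs the remote call
        self._store = {}
        self.remote_calls = 0      # how often the remote resource was contacted

    def get(self, key):
        if key not in self._store:
            self.remote_calls += 1
            self._store[key] = self._fetch(key)
        return self._store[key]

    def invalidate(self, key):
        """Drop a copy that is known to be stale."""
        self._store.pop(key, None)


cache = ReadThroughCache(lambda key: f"contents of {key}")
cache.get("/about")
cache.get("/about")
print(cache.remote_calls)   # the second read was served locally
```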

Caching is designed not only to reduce the load on the components of a distributed system, but also to hide communication delays from the user when accessing remote resources. Technologies like this, which hide communication delays, are important for achieving geographic scalability. They also include asynchronous communication mechanisms, in which the client is not blocked when accessing a remote service but can continue its work immediately after making the request. Later, when a response is received, the client process is interrupted and a special handler is called to complete the operation.
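The pattern can be sketched with Python's asyncio: the client issues the request, registers a handler, and keeps working until the response arrives. The `remote_call` coroutine only simulates network latency with a sleep, and all names here are hypothetical.

```python
import asyncio


async def remote_call(delay):
    """Stand-in for a request to a remote service."""
    await asyncio.sleep(delay)      # simulated network latency
    return "response"


async def client():
    log = []
    # Issue the request without blocking on the result.
    task = asyncio.create_task(remote_call(0.05))
    # Handler that completes the operation once the response is in.
    task.add_done_callback(lambda t: log.append("handled " + t.result()))
    log.append("local work")        # the client keeps working meanwhile
    await task                      # later: the response has arrived
    await asyncio.sleep(0)          # give any pending callback a chance to run
    return log


print(asyncio.run(client()))
```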

However, asynchronous communication is not always applicable. For example, in interactive applications the user is forced to wait for the system to respond. In such cases, you can use code transfer technologies, in which part of the application code is loaded on the client side and executed locally to ensure a quick response to user actions. The advantage of such solutions is that they reduce the number of network interactions and reduce the application's dependence on random delays in the exchange of messages over the network. Code transfer is now widely used on the Internet in the form of Java applets and JavaScript.

Single-user information systems are implemented, as a rule, on a stand-alone personal computer (no network is used). Such a system may contain several simple applications connected by a common information store, and is designed for one user or a group of users sharing one workplace. Such applications are created using so-called desktop, or local, database management systems (DBMS). Among local DBMSs, the best known are Clarion, Clipper, FoxPro, Paradox, dBase, and Microsoft Access.

Group information systems are focused on the collective use of information by members of a work group and are most often built on the basis of a local computer network. When developing such applications, database servers (also called SQL servers) are used for workgroups. There are quite a large number of different SQL servers, both commercial and freely distributed. Among them, the most famous database servers are Oracle, DB2, Microsoft SQL Server, InterBase, Sybase, Informix.

Corporate information systems are a development of systems for workgroups, they are aimed at large companies and can support geographically dispersed nodes or networks. Basically they have a hierarchical structure of several levels. Such systems are characterized by a client-server architecture with specialization of servers or a multi-level architecture. When developing such systems, the same database servers can be used as when developing group information systems. However, in large information systems, the most widely used servers are Oracle, DB2 and Microsoft SQL Server. For group and corporate systems, the requirements for reliable operation and data security are significantly increased. These properties are provided by maintaining the integrity of data, references, and transactions in database servers.

Centralized or distributed database.

Design features of web-oriented systems at present

Scrum is a set of principles on which the development process is built, allowing the team, in strictly fixed short periods of time (sprints of 2 to 4 weeks), to provide the end user with working software that has the new, highest-priority features. The software capabilities to be implemented in the next sprint are determined at the beginning of the sprint, at the planning stage, and cannot change for its entire duration. At the same time, the strictly fixed short duration of the sprint gives the development process predictability and flexibility.

ORM is a programming technology that links databases with the concepts of object-oriented programming languages, creating a "virtual object database".

Problem: In object-oriented programming, objects in a program represent objects in the real world. The essence of the problem is to transform such objects into a form in which they can be stored in files or databases and easily retrieved later, while preserving the properties of the objects and the relationships between them. These objects are called persistent objects. Historically, there have been several approaches to solving this problem.
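A hand-written mapper shows the core of what an ORM automates: turning an object into a table row and back. This is a minimal sketch using SQLite in memory, not the API of any particular ORM library; the `User` and `UserMapper` names and the table layout are hypothetical.

```python
import sqlite3
from dataclasses import astuple, dataclass


@dataclass
class User:
    id: int
    name: str
    email: str


class UserMapper:
    """Maps User objects to rows of a `users` table and back."""

    def __init__(self, connection):
        self.db = connection
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS users "
            "(id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
        )

    def save(self, user):
        # Object -> row: field order matches the column order.
        self.db.execute(
            "INSERT OR REPLACE INTO users (id, name, email) VALUES (?, ?, ?)",
            astuple(user),
        )
        self.db.commit()

    def find(self, user_id):
        # Row -> object, or None if the row does not exist.
        row = self.db.execute(
            "SELECT id, name, email FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return User(*row) if row else None


mapper = UserMapper(sqlite3.connect(":memory:"))
mapper.save(User(1, "Ada", "ada@example.org"))
print(mapper.find(1))
```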

Model-view-controller is a software architecture in which the application data model, user interface, and control logic are separated into three components so that modifying one component has minimal impact on the others.

A lot of words have already been said on this topic both on my blog and beyond. It seems to me that the moment has come to move from the specific to the general and try to look at this topic separately from any successful implementation of it.

Shall we get started?

To begin with, it makes sense to decide what we are going to talk about. In this context, the web application has three main goals:

- scalability: the ability to respond in a timely manner to continuous load growth and unexpected influxes of users;

- availability: providing access to the application even in case of emergency;

- performance: even the slightest delay in loading a page can leave a negative impression on the user.

The main topic of conversation will be, as you might guess, scalability, but I don't think the other goals will be left aside. I would like to say a few words about availability right away, so as not to return to it later and to treat it as a given. Any site strives to function as stably as possible, that is, to be available to all of its potential visitors at every moment in time, but unforeseen situations sometimes happen that can cause temporary unavailability. To minimize the potential impact on the availability of the application, it is necessary to avoid having components in the system whose failure would make any functionality or data (or even the site as a whole) unavailable. Thus, each server or any other component of the system must have at least one backup (it does not matter in what mode they work: in parallel, or with one backing up the other while in passive mode), and the data must be replicated in at least two copies (and preferably not at the RAID level, but on different physical machines).

Storing several backup copies of the data somewhere separate from the main system (for example, on special services or on a separate cluster) will also help avoid many problems if something goes wrong. Don't forget about the financial side of the issue: insurance against failures requires significant additional investment in equipment, which it makes sense to try to minimize.

Scalability is usually divided into two areas:

Vertical scalability. Increasing the performance of each system component to improve overall performance.

Horizontal scalability. Splitting the system into smaller structural components and distributing them across separate physical machines (or groups of them) and/or increasing the number of servers performing the same function in parallel.

One way or another, when developing a system growth strategy, you have to look for a compromise between price, development time, final performance, stability, and a host of other criteria. From a financial point of view, vertical scalability is far from the most attractive solution, because prices for servers with a large number of processors tend to grow almost exponentially with the number of processors. This is why the horizontal approach is the most interesting, and it is the one used in most cases. But vertical scalability sometimes has a right to exist, especially in situations where time and speed of solving the problem matter more than money: after all, buying a BIG server is much faster than practically rewriting an application from scratch to adapt it to run on a large number of parallel servers.

With the generalities out of the way, let's move on to an overview of potential problems and solutions when scaling horizontally. Please do not criticize too much - I don’t claim absolute correctness and reliability, I’m just “thinking out loud”, and I definitely won’t be able to even mention all the points on this topic.

Application Servers

Scaling the applications themselves rarely causes problems if, during development, you always keep in mind that each instance of the application must not be tied directly to its “colleagues” in any way and must be able to process absolutely any user request, regardless of where that user’s previous requests were processed and what exactly the user wants from the application as a whole at the moment.

Once every running instance is independent, you can process more and more requests per unit of time simply by increasing the number of application servers working in parallel. Everything is (relatively) simple.

Load Balancing

The next task is to evenly distribute requests among the available application servers. There are many approaches to solving this problem and even more products that offer their specific implementation.

Hardware: network equipment that distributes the load among several servers usually costs a significant amount, but among the available options this approach usually offers the highest performance and stability (mainly thanks to quality, plus such equipment sometimes comes in pairs working on the HeartBeat principle). There are quite a few serious brands in this industry offering their solutions, so there is plenty to choose from: Cisco, Foundry, NetScaler and many others.

Software: there is an even greater variety of options in this area. It is not easy to get performance from software comparable to that of hardware solutions, and HeartBeat will have to be provided in software, but such a solution runs on a regular server (possibly more than one). There are many such products; usually they are simply HTTP servers that forward requests to their colleagues on other servers instead of passing them directly to the programming language interpreter for processing. One example is mod_proxy. In addition, there are more exotic options based on DNS: when the client resolves the IP address of the server hosting the resource it needs, the address is issued taking into account the load on the available servers, as well as geographical considerations.

Each option has its own set of advantages and disadvantages, which is exactly why there is no single right solution to this problem: each option is good in its own specific situation. Do not forget that nobody limits you to just one of them; if necessary, an almost arbitrary combination can easily be implemented.
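The core of what any balancer does, whether in hardware or software, can be sketched in a few lines. The example below assumes the simplest possible policy (round-robin with servers marked as down being skipped); the class name and the server names are hypothetical, and real products use far more elaborate health checks and weighting.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in turn, skipping the ones marked as down."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.down = set()
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend):
        # In a real setup a heartbeat check would call this.
        self.down.add(backend)

    def mark_up(self, backend):
        self.down.discard(backend)

    def next_backend(self):
        # Look at each backend at most once per call.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate not in self.down:
                return candidate
        raise RuntimeError("no backends available")


balancer = RoundRobinBalancer(["app1", "app2", "app3"])  # hypothetical hosts
print([balancer.next_backend() for _ in range(4)])
```

Because the application servers are independent of each other, the balancer is free to send any request to any live backend.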

Resource-intensive computing

Many applications rely on complex operations such as converting video, images or sound, or simply performing resource-intensive calculations. Such tasks require special attention on the Web, since a user is unlikely to be happy watching a page load for several minutes only to see a message like: “The operation was completed successfully!”

To avoid such situations, try to minimize the amount of resource-intensive work performed synchronously with page generation. If a particular operation does not affect the next page sent to the user, you can simply organize a queue of tasks that need to be completed. In this case, once the user has completed all the actions necessary to start the operation, the application server simply adds a new task to the queue and immediately begins generating the next page without waiting for the result. If the tasks are really heavy, the queue and the job handlers can be placed on a separate server or cluster.

If the result of the operation is needed for the next page sent to the user, then with asynchronous execution you will have to cheat a little and somehow distract the user while it runs. For example, when converting video to flv, you can quickly generate a screenshot of the first frame while the page is being built and show it in place of the video, and then dynamically add the playback option to the page once the conversion is complete.

Another good way of handling such situations is simply to ask the user to “come back later”. For example, if a service generates screenshots of websites in different browsers in order to show owners, or anyone interested, that the sites are displayed correctly, then generating a page with them can take minutes rather than seconds. The most convenient option for the user in such a situation is an offer to visit the page at a given address in so many minutes, instead of waiting for an indefinite period of time.
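The queue idea can be sketched with nothing but the standard library. This is only an in-process illustration under the assumption that a single background thread plays the role of the job handler; in a real system the queue and the handlers would live on a separate server or cluster, and the names `handle_request` and `results` are made up for the example.

```python
import queue
import threading

tasks = queue.Queue()
results = {}  # task_id -> result (or the exception that was raised)


def worker():
    """Job handler: takes tasks off the queue and runs them one by one."""
    while True:
        task_id, func, args = tasks.get()
        try:
            results[task_id] = func(*args)
        except Exception as exc:  # keep the handler alive on a failed task
            results[task_id] = exc
        finally:
            tasks.task_done()


threading.Thread(target=worker, daemon=True).start()


def handle_request(task_id, func, *args):
    """What the application server does: enqueue and answer immediately."""
    tasks.put((task_id, func, args))
    return f"Task {task_id} accepted"


print(handle_request("t1", sum, [1, 2, 3]))  # returns without waiting
tasks.join()                                 # only the demo waits here
print(results["t1"])
```

The request handler never blocks on the heavy work; it only pays the cost of putting an item on the queue.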

Sessions

Almost all web applications interact with their visitors in some way, and in the vast majority of cases there is a need to track user movements across the pages of the site. This is usually solved with sessions: each visitor is assigned a unique identifier, which is given to the visitor for storage in a cookie or, if cookies are unavailable, is constantly “dragged along” in GET parameters. Having received an ID from the user with the next HTTP request, the server can look through the list of IDs already issued and determine unambiguously who sent it. Each ID can be associated with a set of data that the web application can use at its discretion; by default this data is usually stored in a file in a temporary directory on the server.

It would seem that everything is simple, but requests from visitors to the same site can be processed by several servers at once. How, then, can a server determine whether the ID it received was issued by another server, and where the data for it is stored?

The most common solutions are centralization or decentralization of session data. A somewhat absurd phrase, but hopefully a couple of examples can clear things up:

Centralized session storage: the idea is to create a common “piggy bank” for all servers, where they can put the sessions they issue and find out about sessions issued to visitors by other servers. In theory a file system mounted over the network could play the role of such a “piggy bank”, but a DBMS looks more promising, since it eliminates many of the problems associated with storing session data in files. With a shared database, however, do not forget that the load on it will grow steadily with the number of visitors, and it is worth planning in advance for potential failures of the server running this DBMS.

Decentralized session storage: a good example is storing sessions in a system originally designed for distributed data storage in RAM. It lets all servers quickly access any session data, but (unlike the previous method) there is no single central store for it. This avoids bottlenecks in performance and stability during periods of increased load.

As an alternative to sessions, similar mechanisms built on cookies are sometimes used: all the user data the application needs is stored on the client side (probably in encrypted form) and requested as needed. Besides the obvious advantage of not having to store extra data on the server, this raises a number of security problems. Data stored on the client side, even in encrypted form, is a potential threat to many applications, since anyone can try to modify it for their own benefit or in order to harm the application. This approach is good only if you are confident that absolutely any manipulation of the data stored by users is safe. But is it possible to be 100% sure?
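One common way to at least detect modification of client-side data is to sign it with a key known only to the servers. The sketch below is an illustration of that idea and not something taken from the text above: the key, the function names and the cookie layout are all assumptions. Note that it only signs the data (the contents stay readable to the client) and does nothing against replaying an old but valid cookie, so the doubts expressed above still apply.

```python
import base64
import hashlib
import hmac
import json

SECRET_KEY = b"replace-with-a-real-secret"  # hypothetical; shared by all app servers


def _sign(payload: bytes) -> str:
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def encode_cookie(data: dict) -> str:
    """Serialize the data and append an HMAC so tampering can be detected."""
    payload = base64.urlsafe_b64encode(json.dumps(data, sort_keys=True).encode())
    return payload.decode() + "." + _sign(payload)


def decode_cookie(cookie: str):
    """Return the stored dict, or None if the cookie is malformed or modified."""
    try:
        payload, signature = cookie.rsplit(".", 1)
    except ValueError:
        return None
    payload_bytes = payload.encode()
    expected = _sign(payload_bytes)
    # Constant-time comparison of the received and the expected signature.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    return json.loads(base64.urlsafe_b64decode(payload_bytes))


cookie = encode_cookie({"user_id": 42})
print(decode_cookie(cookie))
```

Since every server holds the same key, any of them can verify a cookie issued by any other, which keeps the application servers independent.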

Static content

While the volume of static data is small, nothing prevents you from storing it in the local file system and serving it through a separate lightweight web server (I mean mainly various forms of media data). Sooner or later, however, the server’s disk space limit or the file system’s limit on the number of files in one directory will be reached, and you will have to think about redistributing the content. A temporary solution might be to distribute data by type across different servers, or perhaps to use a hierarchical directory structure.

If static content plays one of the main roles in the application, it is worth thinking about using a distributed file system to store it. This is perhaps one of the few ways to scale the amount of disk space horizontally by adding servers without making any fundamental changes to the application itself. I don’t want to give any advice right now on which cluster file system to choose; I have already published more than one review of specific implementations. Try reading and comparing them all, and if that is not enough, the rest of the Internet is at your disposal.

If this option is not feasible for some reason, you will have to “reinvent the wheel” and implement, at the application level, principles similar to the data segmentation used with a DBMS, which I will mention later. This option is also quite effective, but it requires changes to the application logic, which means additional work for the developers.

An alternative to these approaches is to use a so-called Content Delivery Network: an external service that makes your content available to users in exchange for a fee. The advantage is obvious: there is no need to build your own infrastructure for this task, but another cost item appears. I won’t give a list of such services, but if someone needs one, it won’t be difficult to find.
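A hierarchical directory structure is often built from a hash of the file name, so that files spread evenly over many small directories. The following is a minimal sketch under that assumption; the root path, the function name and the choice of two levels with two hex characters each are arbitrary illustrations.

```python
import hashlib
from pathlib import PurePosixPath


def storage_path(filename: str, root: str = "/var/static", levels: int = 2) -> PurePosixPath:
    """Map a file name to root/xx/yy/filename using a hash of the name."""
    digest = hashlib.sha256(filename.encode("utf-8")).hexdigest()
    # Each level uses the next two hex characters: 256 subdirectories per level.
    parts = [digest[i * 2:(i + 1) * 2] for i in range(levels)]
    return PurePosixPath(root, *parts, filename)


print(storage_path("avatar_123.jpg"))
```

With two levels this gives 65,536 leaf directories, so even tens of millions of files leave only a few hundred entries in each one.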

Caching

Caching makes sense at all stages of data processing, but in different types of applications only certain caching methods are the most effective.

DBMS: almost all modern DBMSs provide built-in mechanisms for caching the results of certain queries. This method is quite effective if your system regularly makes the same data selections, but it also has a number of disadvantages. The main ones are that the cache for an entire table is invalidated at the slightest change, and that the cache is local, which is ineffective when the data storage system consists of several servers.

Application: at the application level, objects of whatever programming language is in use are usually cached. This method lets you avoid a significant share of queries to the DBMS entirely, greatly reducing the load on it. Like the applications themselves, such a cache must be independent of the specific request and of the server on which it is executed; that is, it must be available to all application servers at the same time, and better still, it should be distributed across several machines for more efficient use of RAM. I have already written about the product that can rightfully be called the leader in this aspect of caching.

HTTP server: many web servers have modules for caching both static content and the output of scripts. If a page is rarely updated, this method lets you avoid regenerating it for a fairly large share of requests without any change visible to the user.

Reverse proxy: by placing a transparent proxy server between the user and the web server, you can serve data from the proxy cache (which can be in RAM or on disk) without the requests ever reaching the HTTP servers. In most cases this approach is relevant only for static content, mainly various forms of media data: images, videos and the like. This lets the web servers focus on the pages themselves.

Caching, by its very nature, requires virtually no additional hardware costs, especially if you carefully monitor RAM usage by the other server components and put all the available “surplus” to use in the forms of cache best suited to the particular application.

In some cases cache invalidation can become a non-trivial task, but it is not possible to describe a universal solution to every problem associated with it (at least not for me personally), so let’s leave this question until better times. In general, this problem is left to the web application itself, which usually implements some invalidation mechanism: a cache object is deleted a certain period of time after its creation or last use, or “manually” when certain events arrive from the user or from other components of the system.
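Both invalidation styles mentioned above, by age and "manually" on an event, fit into a very small sketch. This one is a single-process illustration with hypothetical names; it expires entries a fixed time after creation, and a shared cache for several application servers would need a networked store instead of a local dictionary.

```python
import time


class TTLCache:
    """Cache whose entries expire `ttl_seconds` after they were stored."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so the behavior can be tested
        self._items = {}            # key -> (value, expiry time)

    def set(self, key, value):
        self._items[key] = (value, self.clock() + self.ttl)

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Time-based invalidation: drop the stale entry on access.
            del self._items[key]
            return default
        return value

    def invalidate(self, key):
        # "Manual" invalidation, e.g. right after the underlying data changes.
        self._items.pop(key, None)


cache = TTLCache(ttl_seconds=60)
cache.set("user:42", {"name": "example"})
print(cache.get("user:42"))
cache.invalidate("user:42")
print(cache.get("user:42"))
```

Calling `invalidate` from the code path that updates the data keeps the cache fresh, while the time limit acts as a safety net for changes that were missed.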

Databases

I left the most interesting part for dessert, because this integral component of any web application causes more problems under growing load than all the others combined. Sometimes it may even seem that you should abandon horizontal scaling of the data storage system altogether in favor of vertical scaling: just buy that same BIG server for a six- or seven-figure sum (and not in rubles) and spare yourself unnecessary problems.

But for many projects such a radical (and, by and large, temporary) solution is not suitable, which leaves them only one road: horizontal scaling. Let’s talk about it.

From the database point of view, the path of almost any web project begins with one simple server on which the entire project runs. Then, at some point, the need arises to move the DBMS to a separate server, but over time that server also stops coping with the load. There is no particular point in going into detail about these two stages; everything is relatively trivial.

The next step is usually master-slave with asynchronous data replication. How this scheme works has already been mentioned several times in the blog, but I will repeat it: with this approach all write operations are performed on only one server (the master), while the remaining servers (slaves) receive data directly from the master and process only read requests. As a rule, the read and write operations of a web project grow in proportion to the load, while the ratio between the two types of requests stays almost fixed: for each request that updates data there are usually about a dozen read requests on average. Over time the load grows, which means the number of write operations per unit of time grows too, but only one server processes them, and it also has to ensure that a number of copies are created on the other servers. Sooner or later the cost of replication becomes so high that it takes up a very large share of the processor time of each server, and each slave can process only a relatively small number of read operations. As a result, each additional slave increases total performance only slightly, since it too is mostly busy keeping its data in sync with the master.
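The scheme and its limit can be illustrated with a short sketch. The router below uses a deliberately naive rule (anything that is not a SELECT goes to the master), and the capacity function is a toy model that assumes reads and replayed writes cost a slave the same amount of work; both the names and the numbers are hypothetical.

```python
import itertools


class ReplicatedRouter:
    """Sends writes to the master and spreads reads over the slaves."""

    def __init__(self, master, slaves):
        self.master = master
        self._slaves = itertools.cycle(slaves) if slaves else None

    def server_for(self, sql: str):
        # Naive classification: only statements starting with SELECT are reads.
        is_read = sql.lstrip().lower().startswith("select")
        if is_read and self._slaves is not None:
            return next(self._slaves)
        return self.master


def total_read_capacity(slaves: int, ops_per_server: float, writes: float) -> float:
    """Reads all slaves can serve if each one must also replay every write."""
    return slaves * max(ops_per_server - writes, 0)


router = ReplicatedRouter("master", ["slave1", "slave2"])
print(router.server_for("UPDATE users SET name = 'x' WHERE id = 1"))
print(router.server_for("SELECT * FROM users WHERE id = 1"))
print(total_read_capacity(4, 1000, 100), total_read_capacity(4, 1000, 900))
```

In this toy model, four slaves that can each do 1000 operations per second serve 3600 reads per second at 100 writes per second, but only 400 at 900 writes per second: every slave spends most of its time replaying writes, which is exactly the effect described above. Because replication is asynchronous, a read sent to a slave may also briefly return stale data.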

A temporary solution may be to replace the master server with a more powerful one, but one way or another you cannot postpone forever the transition to the next “level” in the development of the data storage system: sharding, to which I recently dedicated a separate post. So I will dwell on it only briefly: the idea is to divide all the data into parts according to some characteristic and store each part on a separate server or cluster. Such a part of the data, together with the storage system that holds it, is called a segment or shard. This approach lets you avoid the costs associated with data replication (or reduce them many times over) and therefore significantly increase the overall performance of the storage system. Unfortunately, the transition to this scheme of data organization carries many costs of a different kind. Since there is no ready-made solution for implementing it, you have to modify the application logic or add an extra “layer” between the application and the DBMS, and all of this is most often implemented by the project’s own developers. Off-the-shelf products can only make their work easier by providing a framework for building the basic architecture of the data storage system and its interaction with the rest of the application components.

At this stage the chain usually ends, since segmented databases can scale horizontally far enough to fully satisfy the needs of even the most heavily loaded Internet resources. It would be appropriate to say a few words about the actual data structure within databases and the organization of access to them, but any decisions are highly dependent on the specific application and implementation, so I will only allow myself to give a couple of general recommendations:
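The "layer" between the application and the DBMS boils down to a function that decides which shard holds a given piece of data. Here is a minimal sketch, assuming the data is divided by user ID and that the list of shards is fixed; the shard names are hypothetical.

```python
import hashlib

SHARDS = ["db-shard-0", "db-shard-1", "db-shard-2", "db-shard-3"]  # hypothetical


def shard_for(user_id: int, shards=SHARDS) -> str:
    """Pick the shard that stores all data belonging to `user_id`."""
    # A stable hash (unlike Python's built-in hash() for strings) gives the
    # same answer on every application server and after every restart.
    digest = hashlib.sha256(str(user_id).encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


for uid in (1, 2, 3, 42):
    print(uid, shard_for(uid))
```

The weak spot of plain modulo hashing is that changing the number of shards moves most keys to a different shard; a lookup table or consistent hashing is the usual way around that, at the cost of more complexity.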

Denormalization: queries that combine data from multiple tables usually require, all other things being equal, more CPU time than a query that touches only one table, so it can pay to store some data redundantly in order to avoid such joins. And performance, as mentioned at the beginning of the story, is extremely important on the Internet.

Logical data partitioning: if some part of the data is always used separately from the bulk, it sometimes makes sense to move it into a separate, independent data storage system.

Low-level query optimization: by keeping and analyzing query logs you can identify the slowest queries. Replacing them with more efficient ones that do the same job helps use computing power more efficiently.

In this section it is worth mentioning another, more specific type of Internet project. Such projects operate on data that has no clearly formalized structure, and in such situations using a relational DBMS as the data store is, to put it mildly, inappropriate. In these cases less strict databases are usually used: they offer more primitive data processing functionality, but can handle huge volumes of information without being picky about its quality and conformance to a format. A clustered file system can serve as the basis for such a data store, and in that case a mechanism called MapReduce is used to analyze the data. I will describe its principle only briefly, since in its full scale it is somewhat beyond the scope of this story.

So, at the input we have some arbitrary data whose format is not necessarily strictly observed. At the output we need to obtain some final value or piece of information. According to this mechanism, almost any data analysis can be carried out in the following two stages:

Map: the main goal of this stage is to represent arbitrary input data as intermediate key-value pairs that are formalized and carry a definite meaning. The results are sorted and grouped by key and then passed on to the next stage.

Reduce: the values received after map are used for the final calculation of the required totals.

Each stage of each specific calculation is implemented as an independent mini-application. This approach allows almost unlimited parallelization of calculations across a huge number of machines, which makes it possible to process almost arbitrary volumes of data very quickly. All you have to do is run these applications on every available server at the same time and then collect all the results together.

An example of a ready-made framework for processing data according to this principle is an open-source Apache Foundation project that I have already mentioned several times and even wrote a separate post about.
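The two stages are easiest to see on the classic word-count task. The sketch below runs everything in a single process, so it shows only the shape of the computation and not the distribution across machines; the function names are made up for the example.

```python
from collections import defaultdict


def map_stage(line):
    """Map: turn a raw input line into intermediate (key, value) pairs."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Sort and group the intermediate pairs by key before the reduce stage."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_stage(key, values):
    """Reduce: compute the final total for one key."""
    return key, sum(values)


def run(lines):
    pairs = (pair for line in lines for pair in map_stage(line))
    return dict(reduce_stage(key, values) for key, values in shuffle(pairs).items())


print(run(["to be or not to be", "to scale or not"]))
```

Each call to `map_stage` depends only on its own line and each call to `reduce_stage` only on its own key, which is what makes it possible to spread both stages over many servers.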

Instead of a conclusion

To be honest, I find it hard to believe that I managed to write such a comprehensive post, and I have almost no energy left to sum it up. I would just like to say that in the development of large projects every detail matters, and a single overlooked detail can cause failure. That is why in this matter you should learn not from your own mistakes, but from those of others.

Although this text may look like a kind of summary of all the posts in the series, it is unlikely to be the final word. I hope I will find something more to say on this topic in the future; maybe one day it will be based on personal experience rather than simply being the result of processing the mass of information I have received. Who knows?..