Brief theory

Kendall's correlation coefficient is used when the variables are measured on two ordinal scales, provided that there are no tied ranks. Calculating the Kendall coefficient involves counting the number of concordances (matches) and inversions.

This coefficient varies within the limits $-1 \le \tau \le 1$ and is calculated using the formula:

$$\tau = \frac{2(P - Q)}{n(n-1)}.$$

For the calculation, all units are ranked by the characteristic $X$. Then, in the resulting sequence of ranks of the other characteristic $Y$, we count for each rank the number of subsequent ranks that exceed it (denoted by $P$) and the number of subsequent ranks that are below it (denoted by $Q$).

It can be shown that

$$P + Q = \frac{n(n-1)}{2},$$

and Kendall's rank correlation coefficient can therefore be written as

$$\tau = \frac{4P}{n(n-1)} - 1.$$

To test, at significance level $\alpha$, the null hypothesis $H_0\colon \tau_g = 0$ that the general (population) Kendall rank correlation coefficient equals zero against the competing hypothesis $H_1\colon \tau_g \ne 0$, compute the critical point:

$$T_{cr} = z_{cr}\sqrt{\frac{2(2n+5)}{9n(n-1)}},$$

where $n$ is the sample size and $z_{cr}$ is the critical point of the two-sided critical region, found from the table of the Laplace function from the equality $\Phi(z_{cr}) = \frac{1-\alpha}{2}$.

If $|\tau| < T_{cr}$, there is no reason to reject the null hypothesis: the rank correlation between the characteristics is insignificant.

If $|\tau| > T_{cr}$, the null hypothesis is rejected: there is a significant rank correlation between the characteristics.
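The counting procedure and the significance test above can be sketched in Python as follows. The helper names (`rank`, `kendall_tau_pq`, `kendall_critical_point`) are illustrative, the ranking assumes no ties (as the method itself requires), and the Laplace function is expressed through the standard normal CDF, $\Phi(z) = \text{NormalDist().cdf}(z) - 0.5$. The usage lines take the test scores from the problem below and assume $\alpha = 0.05$ purely for illustration.

```python
from statistics import NormalDist


def rank(values):
    """Rank values 1..n in ascending order (assumes no ties, as the method requires)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for r, i in enumerate(order, start=1):
        ranks[i] = r
    return ranks


def kendall_tau_pq(x, y):
    """Return (P, Q, tau) using the counting procedure described above."""
    rx, ry = rank(x), rank(y)
    # Write the ranks of y in ascending order of the ranks of x.
    y_sorted = [ry_i for _, ry_i in sorted(zip(rx, ry))]
    n = len(y_sorted)
    p = q = 0
    for i in range(n):
        for j in range(i + 1, n):
            if y_sorted[j] > y_sorted[i]:
                p += 1  # a later rank exceeds the current one
            else:
                q += 1  # a later rank is below the current one
    tau = 2 * (p - q) / (n * (n - 1))
    return p, q, tau


def kendall_critical_point(n, alpha):
    """T_cr = z_cr * sqrt(2(2n+5) / (9n(n-1))), where Phi(z_cr) = (1 - alpha)/2."""
    # Laplace function Phi(z) = cdf(z) - 0.5, so cdf(z_cr) = 1 - alpha/2.
    z_cr = NormalDist().inv_cdf(1 - alpha / 2)
    return z_cr * (2 * (2 * n + 5) / (9 * n * (n - 1))) ** 0.5


test1 = [31, 82, 25, 26, 53, 30, 29]
test2 = [21, 55, 8, 27, 32, 42, 26]
p, q, tau = kendall_tau_pq(test1, test2)
t_cr = kendall_critical_point(len(test1), alpha=0.05)  # alpha = 0.05 is an assumption
print(p, q, round(tau, 3), round(t_cr, 3))
```

Under that assumed $\alpha$, $T_{cr} \approx 0.62$ exceeds $\tau \approx 0.52$, so the rank correlation would not be judged significant; a different $\alpha$ changes only $z_{cr}$.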

Example of problem solution

Problem condition

During the recruitment process, seven candidates for vacant positions were given two tests. The test results (in points) are shown in the table:

| Test / Candidate | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| 1 | 31 | 82 | 25 | 26 | 53 | 30 | 29 |
| 2 | 21 | 55 | 8 | 27 | 32 | 42 | 26 |

Calculate the Kendall rank correlation coefficient between the results of the two tests and evaluate its significance at the given significance level $\alpha$.

Problem solution

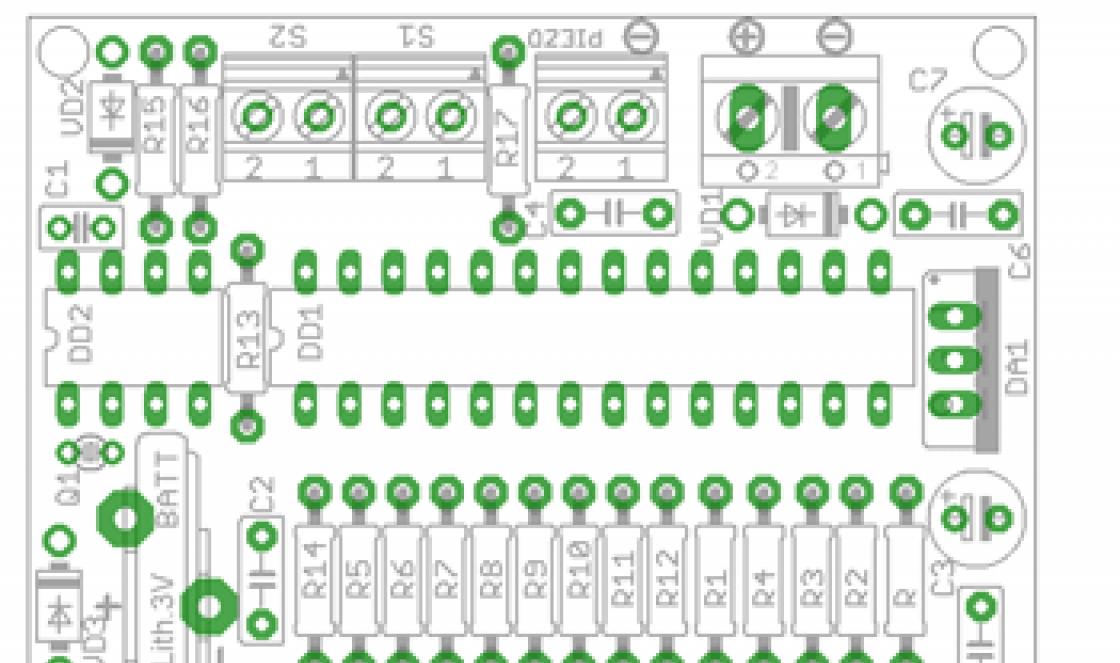

Let's calculate the Kendall coefficient

The ranks of the factor characteristic are arranged strictly in ascending order, and the corresponding ranks of the resultant characteristic are written alongside them. For each rank, among the ranks that follow it, we count the number that are larger (entered in column $P$) and the number that are smaller (entered in column $Q$).

| Rank by test 1 | Rank by test 2 | P | Q |
|---|---|---|---|
| 1 | 1 | 6 | 0 |
| 2 | 4 | 3 | 2 |
| 3 | 3 | 3 | 1 |
| 4 | 6 | 1 | 2 |
| 5 | 2 | 2 | 0 |
| 6 | 5 | 1 | 0 |
| 7 | 7 | 0 | 0 |
| Sum | | 16 | 5 |
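The column sums give the following arithmetic, worked with the formulas $\tau = 2(P-Q)/(n(n-1))$ and $T_{cr} = z_{cr}\sqrt{2(2n+5)/(9n(n-1))}$ from the theory section. The first line also checks the table, since $P + Q$ must equal $n(n-1)/2$. $z_{cr}$ is left symbolic because it depends on the chosen $\alpha$.

```latex
P + Q = 16 + 5 = 21 = \frac{7 \cdot 6}{2}

\tau = \frac{2(P - Q)}{n(n-1)} = \frac{2(16 - 5)}{7 \cdot 6} = \frac{11}{21} \approx 0.524

T_{cr} = z_{cr}\sqrt{\frac{2(2 \cdot 7 + 5)}{9 \cdot 7 \cdot 6}} = z_{cr}\sqrt{\frac{38}{378}} \approx 0.317\, z_{cr}
```

The correlation is significant only if $0.524 > 0.317\, z_{cr}$, that is, if $z_{cr} < 1.65$ approximately.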

KENDALL'S RANK CORRELATION COEFFICIENT

One of the sample measures of dependence between two random variables (features) $X$ and $Y$, based on ranking the sample elements $(X_1, Y_1), \ldots, (X_n, Y_n)$. Kendall's rank correlation coefficient is thus a rank statistic and is defined by the formula

$$\tau = \frac{2S}{n(n-1)},$$

where $r_i$ is the rank of $Y$ belonging to the pair $(X, Y)$ for which the rank of $X$ equals $i$, $S = 2N - n(n-1)/2$, and $N$ is the number of sample elements for which both $j > i$ and $r_j > r_i$. Always $-1 \le \tau \le 1$. Kendall's coefficient was widely used as a sample measure of dependence by M. Kendall (see Lit.).


Kendall's coefficient is used to test the hypothesis of independence of random variables. If the independence hypothesis is true, then $\mathsf{E}\,\tau = 0$ and $\mathsf{D}\,\tau = \frac{2(2n+5)}{9n(n-1)}$. For small samples, the hypothesis of statistical independence is tested using special tables (see Lit.). For $n > 10$, the normal approximation to the distribution of $\tau$ is used: if

$$|\tau| > u_{\alpha/2}\sqrt{\frac{2(2n+5)}{9n(n-1)}},$$

then the hypothesis of independence is rejected; otherwise it is accepted. Here $\alpha$ is the significance level and $u_{\alpha/2}$ is the $\alpha/2$ percentage point of the standard normal distribution. Like any rank statistic, Kendall's coefficient can be used to detect dependence between two qualitative characteristics, provided the sample elements can be ordered with respect to these characteristics. If $X$ and $Y$ have a joint normal distribution with correlation coefficient $\rho$, then Kendall's coefficient and $\rho$ are related by

$$\mathsf{E}\,\tau = \frac{2}{\pi}\arcsin\rho.$$
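A small Monte Carlo sketch shows the relation $\mathsf{E}\,\tau = (2/\pi)\arcsin\rho$ in action. It draws bivariate normal pairs with a chosen $\rho$, computes $\tau$, and compares the result with the formula. The function names, the sample size $n = 800$, $\rho = 0.6$ and the seed are arbitrary illustrative choices. A single sample $\tau$ only approximates its expectation, so expect close but not exact agreement.

```python
import math
import random


def kendall_tau(x, y):
    """Kendall's tau without ties: 2 * (concordant - discordant) / (n(n-1))."""
    n = len(x)
    s = 0
    for i in range(n):
        for j in range(i + 1, n):
            # Ties have probability zero for continuous data, so prod != 0 here.
            prod = (x[i] - x[j]) * (y[i] - y[j])
            s += 1 if prod > 0 else -1
    return 2 * s / (n * (n - 1))


def simulate(rho, n=800, seed=1):
    """Draw n pairs from a standard bivariate normal with correlation rho; return tau."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        xs.append(z1)
        ys.append(rho * z1 + math.sqrt(1 - rho ** 2) * z2)  # corr(xs, ys) = rho
    return kendall_tau(xs, ys)


rho = 0.6
tau_hat = simulate(rho)
expected = 2 / math.pi * math.asin(rho)  # about 0.41
print(round(tau_hat, 3), round(expected, 3))
```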

See also Spearman rank correlation, rank test.

Lit.: Kendall M., Rank Correlations, trans. from English, Moscow, 1975; Van der Waerden B. L., Mathematical Statistics, trans. from German, Moscow, 1960; Bolshev L. N., Smirnov N. V., Tables of Mathematical Statistics, Moscow, 1965.

A. V. Prokhorov.

Mathematical encyclopedia. - M.: Soviet Encyclopedia. I. M. Vinogradov. 1977-1985.


One factor limiting the use of tests based on the assumption of normality is sample size. As long as the sample is large enough (for example, 100 or more observations), you can assume that the sampling distribution is normal, even if you are not sure that the distribution of the variable in the population is normal. If the sample is small, however, these tests should be used only if you are confident that the variable actually has a normal distribution, and in a small sample there is no reliable way to test this assumption.

The use of criteria based on the assumption of normality is also limited by the measurement scale (see the chapter Elementary Concepts of Data Analysis). Statistical methods such as t-test, regression, etc. assume that the original data is continuous. However, there are situations where data are simply ranked (measured on an ordinal scale) rather than measured accurately.

A typical example is provided by ratings of Internet sites: the first position is occupied by the site with the largest number of visitors, the second by the site with the largest number of visitors among the remaining sites (with the first site removed), and so on. Knowing the ratings, we can say that one site has more visitors than another, but not by how much. Imagine you have 5 sites, A, B, C, D, E, ranked in the first 5 places. Suppose that in the current month the arrangement was A, B, C, D, E, and in the previous month D, E, A, B, C. The question is: have the site rankings changed significantly or not? Obviously, we cannot use the t-test to compare these two sets of data here; instead we move into the field of specific probabilistic calculations (and any statistical test involves probabilistic calculations!). We reason roughly as follows: how likely is it that the difference between the two arrangements arose purely by chance, or is the difference too large to be explained by chance alone? In this reasoning we use only the ranks, or permutations, of the sites and make no use of any particular distribution of their visitor counts.
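One way to make this reasoning concrete is to measure the agreement between the two arrangements with Kendall's $\tau$. That choice of statistic is an assumption made for illustration; the text does not fix a particular statistic. Then enumerate all $5! = 120$ equally likely orderings and count how often chance alone gives a $\tau$ at least as far from zero as the observed one. The names below are hypothetical.

```python
from itertools import permutations


def kendall_s(ranks):
    """S = P - Q for a sequence of ranks listed in the order of the other ranking."""
    n = len(ranks)
    return sum(
        1 if ranks[j] > ranks[i] else -1
        for i in range(n)
        for j in range(i + 1, n)
    )


# Current month: A, B, C, D, E; previous month: D, E, A, B, C.
current = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}
previous = {"D": 1, "E": 2, "A": 3, "B": 4, "C": 5}

sites = sorted(current, key=current.get)       # sites in current-month order
observed = [previous[s] for s in sites]        # their previous-month ranks
n = len(observed)
s_obs = kendall_s(observed)
tau_obs = 2 * s_obs / (n * (n - 1))

# Exact null distribution: under pure chance every ordering of 1..n is equally likely.
all_s = [kendall_s(p) for p in permutations(range(1, n + 1))]
p_value = sum(abs(s) >= abs(s_obs) for s in all_s) / len(all_s)  # two-sided
print(tau_obs, round(p_value, 3))
```

Here $\tau = -0.2$ and the exact two-sided probability is $98/120 \approx 0.82$. So a difference of this size between the two arrangements is entirely consistent with chance.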

Nonparametric methods are used to analyze small samples and data measured on weak (nominal or ordinal) scales.

A Brief Overview of Nonparametric Procedures

Essentially, for every parametric criterion there is at least one nonparametric alternative.

In general, these procedures fall into one of the following categories:

- difference tests for independent samples;

- difference tests for dependent samples;

- assessment of the degree of dependence between variables.

In general, the approach to statistical tests in data analysis should be pragmatic and not burdened with unnecessary theoretical considerations. With a computer running STATISTICA, you can easily apply several tests to your data. If you know some of the pitfalls of the methods, you will find the right solution through experimentation. The progression is quite natural: if you want to compare the values of two variables, you use a t-test. Remember, however, that it assumes normality and equal variances in each group. Dropping these assumptions leads to nonparametric tests, which are especially useful for small samples.

Extending the t-test leads to analysis of variance, which is used when more than two groups are compared. The corresponding extension of nonparametric procedures leads to nonparametric analysis of variance, although it offers considerably fewer tools than classical analysis of variance.

To assess dependence or, to put it somewhat grandly, the closeness of a relationship, the Pearson correlation coefficient is calculated. Strictly speaking, its use is limited, for example, by the type of scale on which the data are measured and by non-linearity of the relationship. Nonparametric, or rank, correlation coefficients are therefore used as an alternative, for example for ranked data. If the data are measured on a nominal scale, it is natural to present them in contingency tables, which are analyzed with the Pearson chi-square test and its various modifications and accuracy corrections.

So, essentially there are only a few types of criteria and procedures that you need to know and be able to use, depending on the specifics of the data. You need to determine which criterion should be applied in a particular situation.

Nonparametric methods are most appropriate when sample sizes are small. If there is a lot of data (for example, n >100), it often does not make sense to use nonparametric statistics.

If the sample size is very small (for example, n = 10 or less), then the significance levels for those nonparametric tests that use the normal approximation can only be considered rough estimates.

Differences between independent groups. If you have two samples (for example, men and women) that you want to compare regarding some mean value, such as mean blood pressure or white blood cell count, then you can use the independent samples t test.

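Nonparametric alternatives to this test are the Wald-Wolfowitz runs test, the Mann-Whitney U test, …

Geometric mean

The geometric mean is calculated by the formula $G = \exp\left(\frac{1}{n}\sum_{i=1}^{n}\ln x_i\right)$, where $x_i$ is the $i$-th value and $n$ is the number of observations. If a variable contains negative values or zero (0), the geometric mean cannot be calculated.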

Harmonic mean

The harmonic mean is sometimes used to average frequencies. It is calculated by the formula $H = n \big/ \sum_{i=1}^{n}(1/x_i)$, where $H$ is the harmonic mean, $n$ is the number of observations, and $x_i$ is the value of observation number $i$. If a variable contains zero (0), the harmonic mean cannot be calculated.
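Both means can be sketched directly from their formulas and cross-checked against `statistics.geometric_mean` and `statistics.harmonic_mean` (Python 3.8+). The geometric mean is computed as the exponential of the mean logarithm, which equals the $n$-th root of the product of the values. The function names and data are illustrative.

```python
import math
import statistics


def geometric_mean(xs):
    """G = exp(sum(ln x_i) / n); defined only for strictly positive values."""
    if any(x <= 0 for x in xs):
        raise ValueError("geometric mean requires positive values")
    return math.exp(sum(math.log(x) for x in xs) / len(xs))


def harmonic_mean(xs):
    """H = n / sum(1 / x_i); undefined if any value is zero."""
    if any(x == 0 for x in xs):
        raise ValueError("harmonic mean is undefined when a value is zero")
    return len(xs) / sum(1 / x for x in xs)


data = [1, 2, 4]
print(geometric_mean(data), statistics.geometric_mean(data))  # both about 2.0
print(harmonic_mean(data), statistics.harmonic_mean(data))    # both 12/7
```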

Variance and standard deviation

Sample variance and standard deviation are the most commonly used measures of variability (variation) in data. The variance is calculated as the sum of squared deviations of the variable's values from the sample mean, divided by $n-1$ (not by $n$). The standard deviation is the square root of the variance estimate.
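A sketch of the $n-1$ variance computed by hand and checked against Python's `statistics.variance` and `statistics.stdev`, which also divide by $n - 1$. The function name and data are illustrative.

```python
import math
import statistics


def sample_variance(xs):
    """Sum of squared deviations from the mean, divided by n - 1."""
    n = len(xs)
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / (n - 1)


data = [2, 4, 4, 4, 5, 5, 7, 9]
var = sample_variance(data)
sd = math.sqrt(var)
print(var, statistics.variance(data))  # both 32/7, about 4.571
print(sd, statistics.stdev(data))      # both about 2.138
```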

Range

The range of a variable is an indicator of variability, calculated as the maximum minus the minimum.

Interquartile range

The interquartile range is, by definition, the upper quartile minus the lower quartile (the 75th percentile minus the 25th percentile). The 75th percentile (upper quartile) is the value below which 75% of the observations lie, and the 25th percentile (lower quartile) is the value below which 25% of the observations lie. The interquartile range is therefore an interval around the median that contains 50% of the observations (variable values).
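Range and interquartile range can be sketched in Python as follows. Percentile definitions differ between programs, so the interpolation rule used here (`method="inclusive"` of `statistics.quantiles`) is a choice made for this sketch and may not match STATISTICA's. The function names and data are illustrative.

```python
import statistics


def value_range(xs):
    """Range: maximum minus minimum."""
    return max(xs) - min(xs)


def interquartile_range(xs):
    """Upper quartile minus lower quartile (75th minus 25th percentile)."""
    q1, _median, q3 = statistics.quantiles(xs, n=4, method="inclusive")
    return q3 - q1


data = [1, 2, 3, 4, 5, 6, 7, 8, 9]
print(value_range(data))          # 8
print(interquartile_range(data))  # 4.0 with the "inclusive" quantile rule
```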

Skewness

Skewness is a characteristic of the shape of a distribution. The distribution is skewed to the left if the skewness is negative and skewed to the right if it is positive. The skewness of the standard normal distribution is 0. Skewness is associated with the third moment and is defined as $\text{skewness} = \frac{n M_3}{(n-1)(n-2)s^3}$, where $M_3 = \sum_{i=1}^{n}(x_i - \bar x)^3$, $s^3$ is the standard deviation raised to the third power, and $n$ is the number of observations.
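The skewness formula translates directly into code. In this sketch $M_3$ is the sum of cubed deviations and $s$ is the sample standard deviation (divisor $n - 1$), as in the formula. The function name and data are illustrative, and $n \ge 3$ is required.

```python
import math


def skewness(xs):
    """skewness = n * M3 / ((n-1)(n-2) s^3), with M3 = sum((x - mean)^3)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs)
    m3 = sum((x - mean) ** 3 for x in xs)
    s = math.sqrt(m2 / (n - 1))  # sample standard deviation (divisor n - 1)
    return n * m3 / ((n - 1) * (n - 2) * s ** 3)


print(skewness([1, 2, 3, 4, 5]))  # 0.0 for symmetric data
print(skewness([1, 2, 3, 10]))    # positive: long right tail
```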

Kurtosis

Kurtosis is a characteristic of the shape of a distribution, namely a measure of the sharpness of its peak (relative to a normal distribution, the kurtosis of which is 0). Typically, distributions with a sharper peak than the normal one have positive kurtosis; distributions whose peak is less sharp than the peak of a normal distribution have negative kurtosis. Kurtosis is associated with the fourth moment and is determined by the formula:

$$\text{kurtosis} = \frac{n(n+1)M_4 - 3M_2^2(n-1)}{(n-1)(n-2)(n-3)s^4},$$

where $M_j = \sum_{i=1}^{n}(x_i - \bar x)^j$, $s^4$ is the standard deviation raised to the fourth power, and $n$ is the number of observations.
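A sketch of the kurtosis computation, assuming the bias-adjusted sample form $[n(n+1)M_4 - 3M_2^2(n-1)] / [(n-1)(n-2)(n-3)s^4]$ with $M_j = \sum(x_i - \bar x)^j$ and the sample standard deviation $s$. This form equals 0 on average for normal data. The function name is illustrative, and $n \ge 4$ is required.

```python
def kurtosis(xs):
    """Excess kurtosis: [n(n+1) M4 - 3 M2^2 (n-1)] / [(n-1)(n-2)(n-3) s^4]."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs)
    m4 = sum((x - mean) ** 4 for x in xs)
    s2 = m2 / (n - 1)  # sample variance, so s^4 = s2 ** 2
    num = n * (n + 1) * m4 - 3 * m2 ** 2 * (n - 1)
    den = (n - 1) * (n - 2) * (n - 3) * s2 ** 2
    return num / den


print(kurtosis([1, 2, 3, 4, 5]))  # about -1.2: flatter than a normal peak
```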

Presentation and pre-processing of expert assessments

Several types of assessments are used in practice:

- qualitative (often/rarely, worse/better, yes/no);

- scale ratings (value ranges 50-75, 76-90, 91-120, etc.);

- points from a given interval (from 2 to 5, from 1 to 10), assigned independently of one another;

- ranked (the expert arranges the objects in a certain order and assigns each a serial number, its rank);

- comparative, obtained by one of the comparison methods:

  - the sequential comparison method;

  - the method of pairwise comparison of factors.

At the next step of processing expert opinions, it is necessary to evaluate the degree of agreement between these opinions.

Ratings received from experts can be considered as a random variable, the distribution of which reflects the opinions of experts about the probability of a particular choice of event (factor). Therefore, to analyze the spread and consistency of expert assessments, generalized statistical characteristics are used - averages and measures of spread:

- the standard (root-mean-square) deviation;

- the variation range, from min to max;

- the coefficient of variation, V = standard deviation / arithmetic mean (suitable for any type of assessment):

$$V_i = \frac{\sigma_i}{\bar x_i}$$
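A sketch of $V$ for one expert's (or one factor's) scores. It assumes the sample standard deviation (divisor $n - 1$) is meant; the text does not say which divisor. The function name and scores are hypothetical.

```python
import statistics


def coefficient_of_variation(scores):
    """V = standard deviation / arithmetic mean (sample SD, divisor n - 1)."""
    return statistics.stdev(scores) / statistics.mean(scores)


scores = [2, 4, 4, 4, 5, 5, 7, 9]
print(coefficient_of_variation(scores))  # about 0.43
```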

To assess the similarity of the opinions of each pair of experts, a variety of methods can be used:

- association coefficients, which take into account the number of matching and non-matching answers;

- coefficients of inconsistency of expert opinions.

All these measures can be used either to compare the opinions of two experts, or to analyze the relationship between a series of assessments on two characteristics.

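Spearman's pairwise rank correlation coefficient:

$$\rho_{ij} = 1 - \frac{6\sum_{k=1}^{T} c_k^2}{T(T^2 - 1)},$$

where $i, j = 1, \ldots, n$ index the experts ($n$ is the number of experts), $T$ is the number of factors, and $c_k$ is the difference between the ranks given to the $k$-th factor by the $i$-th and $j$-th experts.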
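Spearman's coefficient for one pair of experts is short to compute. This sketch assumes both experts gave strict rankings (no ties) of the same $T$ factors. The function name and the rankings are hypothetical.

```python
def spearman_rho(ranks_i, ranks_j):
    """rho = 1 - 6 * sum(c_k^2) / (T(T^2 - 1)) for two strict rankings of T factors."""
    t = len(ranks_i)
    c2 = sum((a - b) ** 2 for a, b in zip(ranks_i, ranks_j))
    return 1 - 6 * c2 / (t * (t ** 2 - 1))


expert_1 = [1, 2, 3, 4, 5]
expert_2 = [2, 1, 3, 5, 4]
print(spearman_rho(expert_1, expert_2))  # about 0.8
```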

Kendall's coefficient of concordance $W$ gives an overall assessment of the consistency of the opinions of all experts on all factors, but only for cases where rank estimates were used.

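It has been proven that $S$ reaches its maximum when all experts give the same assessments of all factors, and that this maximum equals

$$S_{max} = \frac{m^2(n^3 - n)}{12},$$

where $n$ is the number of factors and $m$ is the number of experts.

The concordance coefficient is equal to the ratio

$$W = \frac{S}{S_{max}} = \frac{12S}{m^2(n^3 - n)}.$$

If $W$ is close to 1, all experts gave fairly consistent estimates; otherwise their opinions are not consistent.

$S$ is calculated by the formula

$$S = \sum_{i=1}^{n}\left(\sum_{j=1}^{m} r_{ij} - \bar r\right)^2,$$

where $r_{ij}$ is the rank given to the $i$-th factor by the $j$-th expert and $\bar r$ is the mean of the factors' rank sums over the entire assessment matrix, equal to

$$\bar r = \frac{1}{n}\sum_{i=1}^{n}\sum_{j=1}^{m} r_{ij} = \frac{m(n+1)}{2}.$$

Therefore the formula for $S$ can be written as

$$S = \sum_{i=1}^{n}\left(\sum_{j=1}^{m} r_{ij} - \frac{m(n+1)}{2}\right)^2.$$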

If some assessments of the same expert coincide and were converted to tied ranks during processing, the concordance coefficient is calculated by a different formula:

$$W = \frac{12S}{m^2(n^3 - n) - m\sum_{j=1}^{m} T_j}, \qquad (**)$$

where $T_j$ is calculated for each expert whose assessments repeat for different objects, taking the repetitions into account by the rule

$$T_j = \sum_{k=1}^{t_j}\left(h_k^3 - h_k\right),$$

where $t_j$ is the number of groups of equal ranks for the $j$-th expert, and

$h_k$ is the number of equal ranks in the $k$-th group of tied ranks of the $j$-th expert.
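Kendall's $W$ with the tie correction $W = 12S / (m^2(n^3 - n) - m\sum_j T_j)$, where $T_j = \sum(h_k^3 - h_k)$, can be computed from the $m \times n$ matrix of ranks. The sketch below is minimal. The function name and the small matrices in the usage lines are hypothetical examples, not Table 3.

```python
from collections import Counter


def kendall_w(matrix):
    """Kendall's W for an m x n matrix: row j holds the ranks expert j gave to n factors.

    Uses W = 12 S / (m^2 (n^3 - n) - m * sum(T_j)), where T_j = sum(h^3 - h) over the
    groups of tied ranks of expert j (T_j = 0 when expert j has no ties).
    """
    m, n = len(matrix), len(matrix[0])
    rank_sums = [sum(row[i] for row in matrix) for i in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((r - mean_sum) ** 2 for r in rank_sums)
    t_total = 0
    for row in matrix:
        # Counter groups equal ranks; only groups of size h > 1 contribute.
        t_total += sum(h ** 3 - h for h in Counter(row).values() if h > 1)
    return 12 * s / (m ** 2 * (n ** 3 - n) - m * t_total)


print(kendall_w([[1, 2, 3, 4], [1, 2, 3, 4], [1, 2, 3, 4]]))  # 1.0: full agreement
print(kendall_w([[1.5, 1.5, 3], [1, 2, 3]]))                  # 78/84, about 0.929
```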

EXAMPLE. Let 5 experts rank six factors as shown in Table 3:

Table 3 - Experts' answers

| Experts | O1 | O2 | O3 | O4 | O5 | O6 | Sum of ranks by expert |

| E1 | |||||||

| E2 | |||||||

| E3 | |||||||

| E4 | |||||||

| E5 |

Since we did not obtain a strict ranking (the experts' assessments are repeated, and the sums of ranks are not equal), we convert the assessments into tied ranks (Table 4):

Table 4 – Tied ranks of expert assessments

| Experts | O1 | O2 | O3 | O4 | O5 | O6 | Sum of ranks by expert |

| E1 | 2,5 | 2,5 | |||||

| E2 | |||||||

| E3 | 1,5 | 1,5 | 4,5 | 4,5 | |||

| E4 | 2,5 | 2,5 | 4,5 | 4,5 | |||

| E5 | 5,5 | 5,5 | |||||

| Sum of ranks for an object | 7,5 | 9,5 | 23,5 | 29,5 |

Now let us determine the degree of agreement between expert opinions using the concordance coefficient. Since the ranks are tied, we calculate W using formula (**).

Then $\bar r = \frac{m(n+1)}{2} = \frac{5 \cdot 7}{2} = 17.5$.

$S = 10^2 + 8^2 + 4.5^2 + 4.5^2 + 6^2 + 12^2 = 384.5$

Now let us calculate W. To do this, we first compute the values $T_j$ separately. In the example, the ratings are deliberately chosen so that every expert has repeated ratings: the first expert has one pair of equal ratings, the second has three equal ratings, the third and fourth each have two groups of two equal ratings, and the fifth has one pair. Hence:

$T_1 = 2^3 - 2 = 6$, $T_5 = 6$;

$T_2 = 3^3 - 3 = 24$;

$T_3 = (2^3 - 2) + (2^3 - 2) = 12$, $T_4 = 12$.
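Substituting these values into the tie-corrected formula $W = 12S / (m^2(n^3 - n) - m\sum_j T_j)$, with $m = 5$ experts, $n = 6$ factors and $S = 384.5$, gives the following arithmetic:

```latex
\sum_{j=1}^{5} T_j = 6 + 24 + 12 + 12 + 6 = 60

W = \frac{12S}{m^2(n^3 - n) - m\sum_{j} T_j}
  = \frac{12 \cdot 384.5}{5^2\,(6^3 - 6) - 5 \cdot 60}
  = \frac{4614}{5250 - 300}
  = \frac{4614}{4950} \approx 0.932
```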

We see that the consistency of expert opinions is quite high and we can move on to the next stage of the study - justification and adoption of the solution alternative recommended by the experts.

Otherwise, you must return to steps 4-8.

To calculate Kendall's rank correlation coefficient $r_k$, rank the data by one of the characteristics in ascending order and determine the corresponding ranks of the second characteristic. Then, for each rank of the second characteristic, count the number of subsequent ranks that are greater than it, and find the sum of these numbers.

Kendall's rank correlation coefficient is given by

$$r_k = \frac{4\sum_{i=1}^{n} R_i}{n(n-1)} - 1,$$

where $R_i$ is the number of ranks of the second variable, starting from the $(i+1)$-th, whose value is greater than the $i$-th rank of this variable.

There are tables of percentage points of the distribution of $r_k$ that allow you to test the hypothesis that the correlation coefficient is significant.

For large samples, critical values of $r_k$ are not tabulated and have to be calculated with approximate formulas. These are based on the fact that, under the null hypothesis $H_0\colon r_k = 0$ and for large $n$, the random variable

$$z = \frac{r_k}{\sqrt{\dfrac{2(2n+5)}{9n(n-1)}}}$$

is distributed approximately according to the standard normal law.
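The large-sample version can be sketched with a $z$ statistic and a two-sided p-value instead of a critical point. The function name is hypothetical. The input is the sequence of second-variable ranks written in ascending order of the first variable, and $R_i$ is counted as described above. The usage line reuses the rank sequence 1, 4, 3, 6, 2, 5, 7 from the worked example for illustration only; with $n = 7$ the normal approximation is rough.

```python
from statistics import NormalDist


def kendall_rk_ztest(second_ranks):
    """Normal-approximation test of H0: r_k = 0 (no ties assumed).

    R_i counts the later ranks that are greater than the i-th rank.
    """
    n = len(second_ranks)
    r_sum = sum(
        sum(1 for later in second_ranks[i + 1:] if later > second_ranks[i])
        for i in range(n)
    )
    r_k = 4 * r_sum / (n * (n - 1)) - 1
    z = r_k / (2 * (2 * n + 5) / (9 * n * (n - 1))) ** 0.5
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))
    return r_k, z, p_two_sided


r_k, z, p = kendall_rk_ztest([1, 4, 3, 6, 2, 5, 7])
print(round(r_k, 3), round(z, 2), round(p, 3))  # about 0.524, 1.65, 0.10
```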

40. Dependence between traits measured on a nominal or ordinal scale

Often the task arises of checking the independence of two characteristics measured on a nominal or ordinal scale.

Let some objects have two characteristics, $X$ and $Y$, measured with $r$ and $s$ levels, respectively. It is convenient to present the results of such observations in a table called a contingency table of the characteristics.

In the table, $u_i$ ($i = 1, \ldots, r$) and $v_j$ ($j = 1, \ldots, s$) are the values taken by the characteristics, and $n_{ij}$ is the number of objects, out of the total number $n$ of objects, for which $X$ took the value $u_i$ and $Y$ took the value $v_j$.

Let us introduce the following quantities:

$n_{i\cdot} = \sum_{j=1}^{s} n_{ij}$, the number of objects for which $X$ takes the value $u_i$;

$n_{\cdot j} = \sum_{i=1}^{r} n_{ij}$, the number of objects for which $Y$ takes the value $v_j$.

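In addition, the following equalities obviously hold:

$$\sum_{i=1}^{r} n_{i\cdot} = \sum_{j=1}^{s} n_{\cdot j} = \sum_{i=1}^{r}\sum_{j=1}^{s} n_{ij} = n.$$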

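Discrete random variables $X$ and $Y$ are independent if and only if

$$p_{ij} = p_{i\cdot}\,p_{\cdot j}$$

for all pairs $i, j$, where $p_{ij} = P(X = u_i, Y = v_j)$, $p_{i\cdot} = P(X = u_i)$ and $p_{\cdot j} = P(Y = v_j)$.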

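Therefore, the hypothesis of independence of the discrete random variables $X$ and $Y$ can be written as

$$H_0\colon\ p_{ij} = p_{i\cdot}\,p_{\cdot j} \quad \text{for all } i, j.$$

As a rule, the alternative used is

$$H_1\colon\ p_{ij} \ne p_{i\cdot}\,p_{\cdot j} \quad \text{for at least one pair } (i, j).$$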

The validity of $H_0$ should be judged from the sample frequencies $n_{ij}$ of the contingency table. By the law of large numbers, as $n \to \infty$ the relative frequencies are close to the corresponding probabilities:

$$\frac{n_{ij}}{n} \approx p_{ij}, \qquad \frac{n_{i\cdot}}{n} \approx p_{i\cdot}, \qquad \frac{n_{\cdot j}}{n} \approx p_{\cdot j}.$$

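To test $H_0$, the statistic

$$\chi^2 = \sum_{i=1}^{r}\sum_{j=1}^{s}\frac{\left(n_{ij} - n_{i\cdot}n_{\cdot j}/n\right)^2}{n_{i\cdot}n_{\cdot j}/n}$$

is used. If the hypothesis is true, it has, asymptotically as $n \to \infty$, a $\chi^2$ distribution with $rs - (r + s - 1) = (r-1)(s-1)$ degrees of freedom.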

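The $\chi^2$ independence test rejects $H_0$ at significance level $\alpha$ if $\chi^2 > \chi^2_{\alpha}\big((r-1)(s-1)\big)$, where $\chi^2_{\alpha}(k)$ is the upper $\alpha$ percentage point of the $\chi^2$ distribution with $k$ degrees of freedom.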
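The statistic and its degrees of freedom can be computed directly from a contingency table. The function name and the $2 \times 2$ counts below are hypothetical. Turning $\chi^2$ into a decision still requires the tabulated percentage point, for example 3.841 for one degree of freedom at $\alpha = 0.05$.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x s table."""
    r, s = len(table), len(table[0])
    n = sum(sum(row) for row in table)
    row_tot = [sum(row) for row in table]                             # n_i.
    col_tot = [sum(table[i][j] for i in range(r)) for j in range(s)]  # n_.j
    chi2 = 0.0
    for i in range(r):
        for j in range(s):
            expected = row_tot[i] * col_tot[j] / n  # n_i. * n_.j / n under H0
            chi2 += (table[i][j] - expected) ** 2 / expected
    df = r * s - (r + s - 1)  # equals (r - 1)(s - 1)
    return chi2, df


chi2, df = chi_square_independence([[10, 20], [20, 10]])
print(round(chi2, 3), df)  # about 6.667 with 1 degree of freedom
```

For this made-up table, $\chi^2 \approx 6.67 > 3.841$, so independence would be rejected at $\alpha = 0.05$.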

41. Regression analysis. Basic concepts of regression analysis

To mathematically describe the statistical relationships between the studied variables, the following problems should be solved:

- select a class of functions in which it is advisable to look for the best (in a certain sense) approximation of the dependence of interest;

- find estimates of the unknown values of the parameters included in the equations of the desired dependence;

- establish the adequacy of the resulting equation for the desired relationship;

- identify the most informative input variables.

The totality of the listed tasks is the subject of regression analysis research.

The regression function (or simply regression) is the dependence of the mathematical expectation of one random variable on the value taken by another random variable that forms, together with the first, a two-dimensional system of random variables.

Let there be a system of random variables $(X, Y)$; then the regression function of $Y$ on $X$ is

$$f(x) = M[Y \mid X = x],$$

and the regression function of $X$ on $Y$ is

$$\varphi(y) = M[X \mid Y = y].$$

The regression functions $f(x)$ and $\varphi(y)$ are not mutually inverse unless the relationship between $X$ and $Y$ is functional.

For an $n$-dimensional vector with coordinates $X_1, X_2, \ldots, X_n$, one can consider the conditional mathematical expectation of any component. For example, for $X_1$ the conditional expectation

$$M[X_1 \mid X_2 = x_2, \ldots, X_n = x_n]$$

is called the regression of $X_1$ on $X_2, \ldots, X_n$.

To fully define the regression function, it is necessary to know the conditional distribution of the output variable for fixed values of the input variable.

Since such information is not available in real situations, one usually settles for finding a suitable function $f_a(x)$ that approximates $f(x)$, based on statistical data of the form $(x_i, y_i)$, $i = 1, \ldots, n$. These data are the results of $n$ independent observations $y_1, \ldots, y_n$ of the random variable $Y$ at the values $x_1, \ldots, x_n$ of the input variable. Regression analysis assumes that the values of the input variable are specified exactly.

Choosing the best approximating function $f_a(x)$ is the main problem of regression analysis, and there are no formalized procedures for solving it. Sometimes the choice is based on analysis of the experimental data, but more often on theoretical considerations.

If the regression function is assumed to be sufficiently smooth, the approximating function $f_a(x)$ can be represented as a linear combination of a set of linearly independent basis functions $\psi_k(x)$, $k = 0, 1, \ldots, m-1$, i.e. in the form

$$f_a(x; \theta) = \sum_{k=0}^{m-1}\theta_k\,\psi_k(x),$$

where $m$ is the number of unknown parameters $\theta_k$ (in general, this number is itself unknown and is refined while the model is being built).

Such a function is linear in its parameters, so in the case under consideration we speak of a regression function model that is linear in its parameters.

The task of finding the best approximation of the regression function $f(x)$ then reduces to finding parameter values for which $f_a(x; \theta)$ best fits the available data. One method for solving this problem is the least squares method.
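For a model that is linear in its parameters, least squares reduces to the normal equations $\Psi^{T}\Psi\,\theta = \Psi^{T}y$, where $\Psi$ is the matrix of basis-function values $\psi_k(x_i)$. The sketch below solves them with Gaussian elimination in plain Python. The function name and the quadratic test data are illustrative. In numerical practice a QR or SVD solver is usually preferred, because forming $\Psi^{T}\Psi$ can worsen conditioning.

```python
def least_squares(xs, ys, basis):
    """Fit f_a(x; theta) = sum_k theta_k * psi_k(x) by solving the normal equations."""
    m = len(basis)
    # Design matrix: row i holds psi_0(x_i), ..., psi_{m-1}(x_i).
    rows = [[psi(x) for psi in basis] for x in xs]
    # Augmented matrix [Psi^T Psi | Psi^T y].
    a = [
        [sum(row[k] * row[l] for row in rows) for l in range(m)]
        + [sum(row[k] * y for row, y in zip(rows, ys))]
        for k in range(m)
    ]
    # Forward elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, m):
            factor = a[r][col] / a[col][col]
            for c in range(col, m + 1):
                a[r][c] -= factor * a[col][c]
    # Back substitution.
    theta = [0.0] * m
    for k in reversed(range(m)):
        tail = sum(a[k][c] * theta[c] for c in range(k + 1, m))
        theta[k] = (a[k][m] - tail) / a[k][k]
    return theta


basis = [lambda x: 1.0, lambda x: x, lambda x: x * x]  # psi_0, psi_1, psi_2
xs = [0, 1, 2, 3, 4]
ys = [1 + 2 * x + 3 * x * x for x in xs]                # exact quadratic
theta = least_squares(xs, ys, basis)
print(theta)                                            # close to [1, 2, 3]
```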

42. Least squares method

Let the points $(x_i, y_i)$, $i = 1, \ldots, n$, lie in the plane along some straight line.

Then it is natural to take as the function $f_a(x)$ approximating the regression function $f(x) = M[Y \mid x]$ a linear function of the argument $x$:

$$f_a(x) = \theta_0 + \theta_1 x.$$

That is, the basis functions chosen here are $\psi_0(x) \equiv 1$ and $\psi_1(x) \equiv x$. This type of regression is called simple linear regression.

If the points $(x_i, y_i)$, $i = 1, \ldots, n$, lie along some curve, it is natural to try a family of parabolas as $f_a(x)$.

This function is nonlinear in the parameters $\theta_0$ and $\theta_1$; however, a functional transformation (in this case, taking the logarithm) reduces it to a new function $f'_a(x)$ that is linear in the parameters:

43. Simple Linear Regression

The simplest regression model is the simple (univariate, one-factor, paired) linear model, which has the following form:

$$y_i = a + b x_i + \varepsilon_i, \quad i = 1, \ldots, n,$$

where $\varepsilon_i$ are random variables (errors) that are uncorrelated with each other and have zero mathematical expectations and identical variances $\sigma^2$, and $a$ and $b$ are constant coefficients (parameters) that need to be estimated from the measured response values $y_i$.

To find the estimates of the parameters $a$ and $b$ of the linear regression, which determine the straight line that best fits the experimental data,

$$\hat y = a + b x,$$

the least squares method is used.

According to the least squares method, the estimates of $a$ and $b$ are found from the condition of minimizing the sum of squared vertical deviations of the values $y_i$ from the regression line:

$$D = \sum_{i=1}^{n}\left(y_i - a - b x_i\right)^2 \to \min.$$

Let ten observations of the random variable $Y$ be made at fixed values of the variable $X$.

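To minimize $D$, we set the partial derivatives with respect to $a$ and $b$ equal to zero:

$$\frac{\partial D}{\partial a} = -2\sum_{i=1}^{n}\left(y_i - a - b x_i\right) = 0, \qquad \frac{\partial D}{\partial b} = -2\sum_{i=1}^{n}x_i\left(y_i - a - b x_i\right) = 0.$$

As a result, we obtain the following system of equations for the estimates $a$ and $b$:

$$\begin{cases} n a + b\sum x_i = \sum y_i,\\ a\sum x_i + b\sum x_i^2 = \sum x_i y_i. \end{cases}$$

Solving these two equations gives:

$$b = \frac{n\sum x_i y_i - \sum x_i\sum y_i}{n\sum x_i^2 - \left(\sum x_i\right)^2}, \qquad a = \frac{\sum y_i - b\sum x_i}{n}.$$

The estimates of $a$ and $b$ can also be written as:

$$b = \frac{\sum (x_i - \bar x)(y_i - \bar y)}{\sum (x_i - \bar x)^2}, \qquad a = \bar y - b\bar x.$$

Then the empirical regression line of $Y$ on $X$ can be written as:

$$\hat y = a + b x \quad \text{or} \quad \hat y - \bar y = b(x - \bar x).$$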

An unbiased estimate of the variance $\sigma^2$ of the deviations of the values $y_i$ from the fitted regression line is given by

$$s_0^2 = \frac{1}{n-2}\sum_{i=1}^{n}\left(y_i - \hat y_i\right)^2.$$
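The estimates $a$, $b$ and $s_0^2$ can be computed directly from the deviation-form expressions. This is a minimal sketch. The function name and the five data points are hypothetical and are not the ten observations mentioned in the text.

```python
def simple_linear_regression(xs, ys):
    """Least-squares estimates a, b of y = a + b x and the residual variance s0^2."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    b = sxy / sxx
    a = y_mean - b * x_mean
    # Unbiased estimate of sigma^2: residual sum of squares over n - 2.
    s0_sq = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / (n - 2)
    return a, b, s0_sq


a, b, s0_sq = simple_linear_regression([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(a, b, s0_sq)  # about 2.2, 0.6, 0.8
```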

Let's calculate the parameters of the regression equation

Thus, the regression line looks like:

The estimate of the variance of the deviations of the values $y_i$ from the fitted regression line:

44. Checking the significance of the regression line

The estimate $b \ne 0$ that was found may be a realization of a random variable whose mathematical expectation is zero; that is, there may actually be no regression dependence.

To check this, test the hypothesis $H_0\colon b = 0$ against the competing hypothesis $H_1\colon b \ne 0$.

Testing the significance of a regression line can be done using analysis of variance.

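Consider the following identity:

$$y_i - \hat y_i = (y_i - \bar y) - (\hat y_i - \bar y).$$

The quantity $e_i = y_i - \hat y_i$ is called the residual. It is the difference between two quantities:

- the deviation of the observed value (response) from the overall mean response;

- the deviation of the predicted response value $\hat y_i$ from the same mean.

The identity can be rewritten in the form

$$y_i - \bar y = (\hat y_i - \bar y) + (y_i - \hat y_i).$$

Squaring both sides and summing over $i$ (the cross term vanishes for the least-squares line), we get:

$$\sum_{i=1}^{n}(y_i - \bar y)^2 = \sum_{i=1}^{n}(\hat y_i - \bar y)^2 + \sum_{i=1}^{n}(y_i - \hat y_i)^2,$$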

where the quantities are named as follows:

- $SC_n = \sum (y_i - \bar y)^2$ is the complete (total) sum of squares, equal to the sum of squared deviations of the observations from the mean of the observations;

- $SC_p = \sum (\hat y_i - \bar y)^2$ is the sum of squares due to regression, equal to the sum of squared deviations of the regression-line values from the mean of the observations;

- $SC_0 = \sum (y_i - \hat y_i)^2$ is the residual sum of squares, equal to the sum of squared deviations of the observations from the regression-line values.

Thus, the spread of the $y_i$ about their mean is explained partly by the regression and partly by the fact that not all observations lie on the regression line. If all of them did, the residual sum of squares $SC_0$ would be zero. It follows that the regression is significant if the sum of squares $SC_p$ is large compared with $SC_0$.

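The calculations for testing the significance of the regression are arranged in the following ANOVA table:

| Source of variation | Sum of squares | Degrees of freedom | Mean square |
|---|---|---|---|
| Regression | $SC_p$ | 1 | $SC_p/1$ |
| Residual | $SC_0$ | $n-2$ | $s_0^2 = SC_0/(n-2)$ |
| Total | $SC_n$ | $n-1$ | |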

If the errors $\varepsilon_i$ are normally distributed, then, when the hypothesis $H_0\colon b = 0$ is true, the statistic

$$F = \frac{SC_p/1}{SC_0/(n-2)} = \frac{SC_p}{s_0^2}$$

follows Fisher's distribution with 1 and $n-2$ degrees of freedom.

The null hypothesis is rejected at significance level $\alpha$ if the calculated value of the statistic $F$ exceeds the upper $\alpha$ percentage point $f_{1;n-2;\alpha}$ of the Fisher distribution.
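The sums of squares and the $F$ statistic can be computed as sketched below. The function name and data are hypothetical. The result still has to be compared with the tabulated percentage point $f_{1;n-2;\alpha}$.

```python
def regression_anova(xs, ys):
    """Return SC_n, SC_p, SC_0 and F = SC_p / (SC_0 / (n - 2)) for y = a + b x."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    b = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    a = y_mean - b * x_mean
    fitted = [a + b * x for x in xs]
    sc_n = sum((y - y_mean) ** 2 for y in ys)             # total
    sc_p = sum((f - y_mean) ** 2 for f in fitted)         # due to regression
    sc_0 = sum((y - f) ** 2 for y, f in zip(ys, fitted))  # residual
    f_stat = sc_p / (sc_0 / (n - 2))
    return sc_n, sc_p, sc_0, f_stat


sc_n, sc_p, sc_0, f_stat = regression_anova([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(sc_n, sc_p, sc_0, f_stat)  # about 6.0 = 3.6 + 2.4, F = 4.5
```

Here $n - 2 = 3$, and the tabulated $f_{1;3;0.05} \approx 10.13$ exceeds $F = 4.5$. So for these points $H_0\colon b = 0$ would not be rejected at $\alpha = 0.05$.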

45. Checking the adequacy of the regression model. Residual method

The adequacy of the constructed regression model means that no other model provides a significant improvement in predicting the response.

If all response values are obtained at different values of $x$, i.e. no $x_i$ has several response values, only a limited check of the adequacy of the linear model can be carried out. Such a check is based on the residuals, the deviations from the fitted relationship:

$$d_i = y_i - \hat y_i, \quad i = 1, \ldots, n.$$

Since $X$ is a one-dimensional variable, the points $(x_i, d_i)$ can be plotted in the plane as a so-called residual plot. Such a plot sometimes reveals a pattern in the behavior of the residuals. In addition, residual analysis allows one to check the assumption about the error distribution law.

When the errors are normally distributed and an a priori estimate of their variance $\sigma^2$ is available (an estimate obtained from previously performed measurements), a more accurate assessment of the adequacy of the model is possible.

Fisher's $F$ test can be used to check whether the residual variance $s_0^2$ differs significantly from the a priori estimate. If it is significantly greater, the model is inadequate and should be revised.

If no a priori estimate of σ² is available, but the response Y has been measured two or more times at the same values of X, these repeated observations can be used to obtain another estimate of σ² (the first one being the residual variance). Such an estimate is said to represent “pure” error: when x is the same for two or more observations, only random variation can affect the results and produce scatter between them.

This estimate of the variance is more reliable than estimates obtained in other ways, in particular the residual variance, which also absorbs any systematic lack of fit of the model. For this reason, when planning experiments it makes sense to include repeated runs.

Suppose that there are m distinct values of X: $x_1, x_2, \dots, x_m$, and that for each value $x_i$ there are $n_i$ observations of the response Y. The total number of observations is

$$n = \sum_{i=1}^m n_i.$$

Then the simple linear regression model can be written as

$$y_{ij} = a + b x_i + \varepsilon_{ij}, \qquad i = 1, \dots, m, \quad j = 1, \dots, n_i.$$

Let us find the variance of the “pure” errors. It is the pooled estimate of σ² obtained by treating the responses $y_{ij}$ at $x = x_i$ as a sample of size $n_i$. The variance of the “pure” errors is therefore

$$s_{\text{pe}}^2 = \frac{1}{n-m}\sum_{i=1}^m \sum_{j=1}^{n_i} (y_{ij} - \bar y_i)^2, \qquad \bar y_i = \frac{1}{n_i}\sum_{j=1}^{n_i} y_{ij},$$

where $n - m = \sum_{i=1}^m (n_i - 1)$ is its number of degrees of freedom.

This variance serves as an estimate of σ² regardless of whether the fitted model is correct.

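Let us show that the sum of squares of the “pure” errors is part of the residual sum of squares (the sum of squares that enters the residual variance). The residual of the j-th observation at $x_i$ can be written as

$$y_{ij} - \hat y_i = (y_{ij} - \bar y_i) + (\bar y_i - \hat y_i), \qquad \hat y_i = \hat a + \hat b x_i.$$

Squaring both sides and summing over j and over i, the cross term vanishes because $\sum_{j=1}^{n_i} (y_{ij} - \bar y_i) = 0$ for every i, and we get

$$\sum_{i=1}^m \sum_{j=1}^{n_i} (y_{ij} - \hat y_i)^2 = \sum_{i=1}^m \sum_{j=1}^{n_i} (y_{ij} - \bar y_i)^2 + \sum_{i=1}^m n_i (\bar y_i - \hat y_i)^2.$$

The left-hand side is the residual sum of squares $SC_0$, with n − 2 degrees of freedom. The first term on the right is the sum of squares of the “pure” errors, with n − m degrees of freedom; the second can be called the lack-of-fit (inadequacy) sum of squares. The latter has (n − 2) − (n − m) = m − 2 degrees of freedom, hence the lack-of-fit variance is

$$s_{\text{lf}}^2 = \frac{1}{m-2}\sum_{i=1}^m n_i (\bar y_i - \hat y_i)^2.$$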

The test statistic for the hypothesis $H_0$: the simple linear model is adequate, against $H_1$: the simple linear model is inadequate, is the random variable

$$F = \frac{s_{\text{lf}}^2}{s_{\text{pe}}^2}.$$

If the null hypothesis is true, F has a Fisher distribution with m − 2 and n − m degrees of freedom. The hypothesis that the regression is linear is rejected at significance level α if the computed value of F exceeds the upper α point $f_{m-2,n-m,\alpha}$ of the Fisher distribution.
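The lack-of-fit test can be sketched as follows, assuming the data are given as parallel lists in which repeated values of x are stored as equal numbers (grouping uses exact equality). The function `lack_of_fit_test` and the data are hypothetical; the sketch requires m > 2 distinct x values and n > m, and the resulting F must still be compared with $f_{m-2,n-m,\alpha}$ from a table.

```python
from collections import defaultdict


def lack_of_fit_test(x, y):
    """Hypothetical helper: pure-error / lack-of-fit decomposition for a straight line.

    Returns (SS of pure error, SS of lack of fit, F, (m - 2, n - m)).
    """
    n = len(x)
    # Least-squares line fitted to all n observations
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b_hat = sxy / sxx
    a_hat = y_bar - b_hat * x_bar

    # Group the repeated responses by their (exactly equal) x value
    groups = defaultdict(list)
    for xi, yi in zip(x, y):
        groups[xi].append(yi)
    m = len(groups)

    ss_pe = 0.0  # pure-error sum of squares, n - m df
    ss_lf = 0.0  # lack-of-fit sum of squares, m - 2 df
    for xi, ys in groups.items():
        mean_i = sum(ys) / len(ys)
        ss_pe += sum((yij - mean_i) ** 2 for yij in ys)
        ss_lf += len(ys) * (mean_i - (a_hat + b_hat * xi)) ** 2

    s_pe_sq = ss_pe / (n - m)
    s_lf_sq = ss_lf / (m - 2)
    return ss_pe, ss_lf, s_lf_sq / s_pe_sq, (m - 2, n - m)


# Illustrative data: two responses at each of three x values
print(lack_of_fit_test([1, 1, 2, 2, 3, 3], [1, 3, 4, 6, 5, 7]))
```

For these six points the pure-error and lack-of-fit sums of squares are 6 and 4/3, giving F = 2/3 with (1, 3) degrees of freedom, so there is no evidence that the straight line is inadequate.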

46. Checking the adequacy of the regression model (see 45). Analysis of variance

47. Checking the adequacy of the regression model (see 45). Determination coefficient

The quality of a regression line is sometimes characterized by the sample coefficient of determination $R^2$, which shows what share of the total sum of squares is accounted for by the sum of squares due to regression, $SC_p$:

$$R^2 = \frac{SC_p}{\sum_{i=1}^n (y_i - \bar y)^2} = 1 - \frac{SC_0}{\sum_{i=1}^n (y_i - \bar y)^2}.$$

The closer $R^2$ is to unity, the better the regression approximates the experimental data and the closer the observations lie to the regression line. If $R^2 = 0$, the changes in the response are entirely due to unaccounted factors, and the regression line is parallel to the x-axis. For simple linear regression the coefficient of determination equals the square of the sample correlation coefficient: $R^2 = r^2$.

The maximum value $R^2 = 1$ can be reached only when all observations were made at different values of x. If the data contain repeated observations at the same x with different responses, $R^2$ cannot reach unity however good the model is, because the pure-error sum of squares, which no model can remove, is part of the residual sum of squares $SC_0$.
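A short sketch (hypothetical helper and illustrative data) that computes $R^2 = SC_p / \sum (y_i - \bar y)^2$ and checks that it equals $r^2$ for a simple linear regression. The second data set has two different responses at each of two x values; the fitted line passes exactly through both group means, yet $R^2 = 0.8$, because the pure-error scatter remains in $SC_0$.

```python
import math


def r_squared_and_r(x, y):
    """Hypothetical helper: coefficient of determination R^2 and sample correlation r."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)   # total sum of squares
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b_hat = sxy / sxx
    ss_reg = b_hat ** 2 * sxx                  # SC_p = sum (y_hat_i - y_bar)^2
    r_sq = ss_reg / syy
    r = sxy / math.sqrt(sxx * syy)
    return r_sq, r


# Distinct x values
r2_a, r_a = r_squared_and_r([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
# Repeated x values with different responses: R^2 stays below 1
r2_b, r_b = r_squared_and_r([1, 1, 2, 2], [1, 2, 3, 4])
print(r2_a, r_a ** 2, r2_b)
```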

48. Confidence intervals for simple linear regression parameters

Just as the sample mean is an estimate of the true (population) mean, the sample parameters $\hat a$ and $\hat b$ of a regression equation are only estimates of the true regression coefficients a and b. Different samples produce different estimates of the mean, and in the same way different samples produce different estimates of the regression coefficients.

Assuming that the errors $\varepsilon_i$ are normally distributed, the estimate $\hat b$ has a normal distribution with parameters

$$E(\hat b) = b, \qquad \operatorname{Var}(\hat b) = \frac{\sigma^2}{\sum_{i=1}^n (x_i - \bar x)^2}.$$

Since the estimate $\hat a$ is a linear combination of independent normally distributed quantities, it is also normally distributed, with expectation and variance

$$E(\hat a) = a, \qquad \operatorname{Var}(\hat a) = \sigma^2 \left( \frac{1}{n} + \frac{\bar x^2}{\sum_{i=1}^n (x_i - \bar x)^2} \right).$$

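Since the ratio $(n-2)s_0^2/\sigma^2$ has a $\chi^2$ distribution with n − 2 degrees of freedom, the (1 − α) confidence interval for the variance σ² is

$$\frac{(n-2)s_0^2}{\chi^2_{n-2,\alpha/2}} \le \sigma^2 \le \frac{(n-2)s_0^2}{\chi^2_{n-2,1-\alpha/2}},$$

where $\chi^2_{n-2,p}$ denotes the upper p point of the $\chi^2$ distribution with n − 2 degrees of freedom.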
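The sketch below computes the residual variance $s_0^2$, the interval for σ² given above, and intervals for a and b. The intervals for a and b rely on the standard result, not written out above, that replacing σ² by $s_0^2$ in the variances of $\hat a$ and $\hat b$ gives Student's t distribution with n − 2 degrees of freedom. The function name and data are illustrative; the quantiles are passed in as table values (here for n = 5 and α = 0.05).

```python
import math


def parameter_intervals(x, y, t_crit, chi2_hi, chi2_lo):
    """Hypothetical helper for (1 - alpha) intervals in simple linear regression.

    t_crit  = t_{n-2, alpha/2}          (upper point, from a table)
    chi2_hi = chi^2_{n-2, alpha/2}      (upper alpha/2 point)
    chi2_lo = chi^2_{n-2, 1 - alpha/2}  (upper 1 - alpha/2 point)
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b_hat = sxy / sxx
    a_hat = y_bar - b_hat * x_bar
    ss_res = sum((yi - a_hat - b_hat * xi) ** 2 for xi, yi in zip(x, y))
    s0_sq = ss_res / (n - 2)                                  # residual variance s_0^2
    se_b = math.sqrt(s0_sq / sxx)                             # sigma^2 replaced by s_0^2
    se_a = math.sqrt(s0_sq * (1 / n + x_bar ** 2 / sxx))
    return {
        "a": (a_hat - t_crit * se_a, a_hat + t_crit * se_a),
        "b": (b_hat - t_crit * se_b, b_hat + t_crit * se_b),
        "sigma2": ((n - 2) * s0_sq / chi2_hi, (n - 2) * s0_sq / chi2_lo),
        "s0_sq": s0_sq,
    }


# n = 5, alpha = 0.05: t_{3,0.025} ~ 3.182, chi^2_{3,0.025} ~ 9.348, chi^2_{3,0.975} ~ 0.216
ci = parameter_intervals([1, 2, 3, 4, 5], [2, 4, 5, 4, 5], 3.182, 9.348, 0.216)
print(ci)
```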

49. Confidence intervals for the regression line. Confidence interval for dependent variable values

Usually we do not know the true values of the regression coefficients a and b; we only know their estimates. In other words, the true regression line may be higher or lower, steeper or flatter, than the one built from the sample data. We have obtained confidence intervals for the regression coefficients; a confidence region can also be constructed for the regression line itself.

Suppose that for simple linear regression we need to construct a (1 − α) confidence interval for the mathematical expectation of the response Y at $X = x_0$. This expectation equals $a + b x_0$, and its estimate is

$$\hat y_0 = \hat a + \hat b x_0.$$

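Since $\hat a = \bar y - \hat b \bar x$, this estimate can be rewritten as

$$\hat y_0 = \bar y + \hat b (x_0 - \bar x).$$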

This estimate of the mathematical expectation is a linear combination of the uncorrelated normally distributed quantities $\bar y$ and $\hat b$, so it is also normally distributed, centered at the true conditional expectation $a + b x_0$, with variance

$$\operatorname{Var}(\hat y_0) = \sigma^2 \left( \frac{1}{n} + \frac{(x_0 - \bar x)^2}{\sum_{i=1}^n (x_i - \bar x)^2} \right).$$

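Therefore, replacing σ by its estimate $s_0$, the confidence interval for the regression line at each value $x_0$ can be written as

$$\hat y_0 \pm t_{n-2,\alpha/2}\, s_0 \sqrt{\frac{1}{n} + \frac{(x_0 - \bar x)^2}{\sum_{i=1}^n (x_i - \bar x)^2}}.$$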

The interval is narrowest when $x_0$ equals the mean $\bar x$ and widens as $x_0$ moves away from the mean in either direction.
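A sketch of the pointwise interval for the regression line. The function name, the data, and the table value $t_{3,0.025} \approx 3.182$ are assumptions for illustration. Printing the interval for several $x_0$ shows that the width is smallest at $x_0 = \bar x = 3$ and grows symmetrically on either side.

```python
import math


def mean_response_interval(x, y, x0, t_crit):
    """Hypothetical helper: (1 - alpha) interval for a + b*x0; t_crit = t_{n-2, alpha/2}."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b_hat = sxy / sxx
    a_hat = y_bar - b_hat * x_bar
    ss_res = sum((yi - a_hat - b_hat * xi) ** 2 for xi, yi in zip(x, y))
    s0 = math.sqrt(ss_res / (n - 2))
    y0_hat = y_bar + b_hat * (x0 - x_bar)          # same as a_hat + b_hat * x0
    half = t_crit * s0 * math.sqrt(1 / n + (x0 - x_bar) ** 2 / sxx)
    return y0_hat - half, y0_hat + half


x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
for x0 in [1, 2, 3, 4, 5, 7]:
    lo, hi = mean_response_interval(x, y, x0, t_crit=3.182)
    print(x0, round(lo, 3), round(hi, 3), "width", round(hi - lo, 3))
```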

To obtain a set of joint confidence intervals valid simultaneously for the whole regression line, over its entire length, $t_{n-2,\alpha/2}$ in the expression above must be replaced by

$$\sqrt{2 f_{2,n-2,\alpha}},$$

where $f_{2,n-2,\alpha}$ is the upper α point of the Fisher distribution with 2 and n − 2 degrees of freedom (the Working–Hotelling confidence band).
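To compare the pointwise intervals with the joint band, the same half-width formula can be evaluated with the two multipliers. This is a sketch with a hypothetical helper and illustrative data; $t_{3,0.025} \approx 3.182$ and $f_{2,3,0.05} \approx 9.55$ are table values for n = 5 and α = 0.05.

```python
import math


def confidence_band(x, y, grid, multiplier):
    """Hypothetical helper: (x0, lower, upper) for each x0 in grid.

    multiplier = t_{n-2, alpha/2} gives pointwise intervals;
    multiplier = sqrt(2 * f_{2, n-2, alpha}) gives the joint (Working-Hotelling) band.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b_hat = sxy / sxx
    a_hat = y_bar - b_hat * x_bar
    s0 = math.sqrt(sum((yi - a_hat - b_hat * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2))
    band = []
    for x0 in grid:
        y0_hat = a_hat + b_hat * x0
        half = multiplier * s0 * math.sqrt(1 / n + (x0 - x_bar) ** 2 / sxx)
        band.append((x0, y0_hat - half, y0_hat + half))
    return band


x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
grid = [1.0, 2.0, 3.0, 4.0, 5.0]
pointwise = confidence_band(x, y, grid, 3.182)             # t_{3,0.025}
joint = confidence_band(x, y, grid, math.sqrt(2 * 9.55))   # sqrt(2 f_{2,3,0.05})
for (x0, lo_p, hi_p), (_, lo_j, hi_j) in zip(pointwise, joint):
    print(x0, "pointwise width", round(hi_p - lo_p, 3), "joint width", round(hi_j - lo_j, 3))
```

The joint band is wider everywhere by the constant factor $\sqrt{2 f_{2,n-2,\alpha}} / t_{n-2,\alpha/2}$ (about 1.37 here), which is the price paid for covering the whole line at once rather than one $x_0$ at a time.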